The Strangler Pipeline introduced a Repeatable Reliable Process for start/stop, deployment, and database migration

Previous entries in the Strangler Pipeline series:

To start our Continuous Delivery journey at Sky Network Services, we created a cross-team working group and identified the following challenges:

- Slow platform build times. Developers used brittle, slow Maven/Ruby scripts to construct platforms of applications.

- Different start/stop methods. Developers used a Ruby script to start/stop individual applications, whereas server administrators used a Perl script to start/stop platforms of applications.

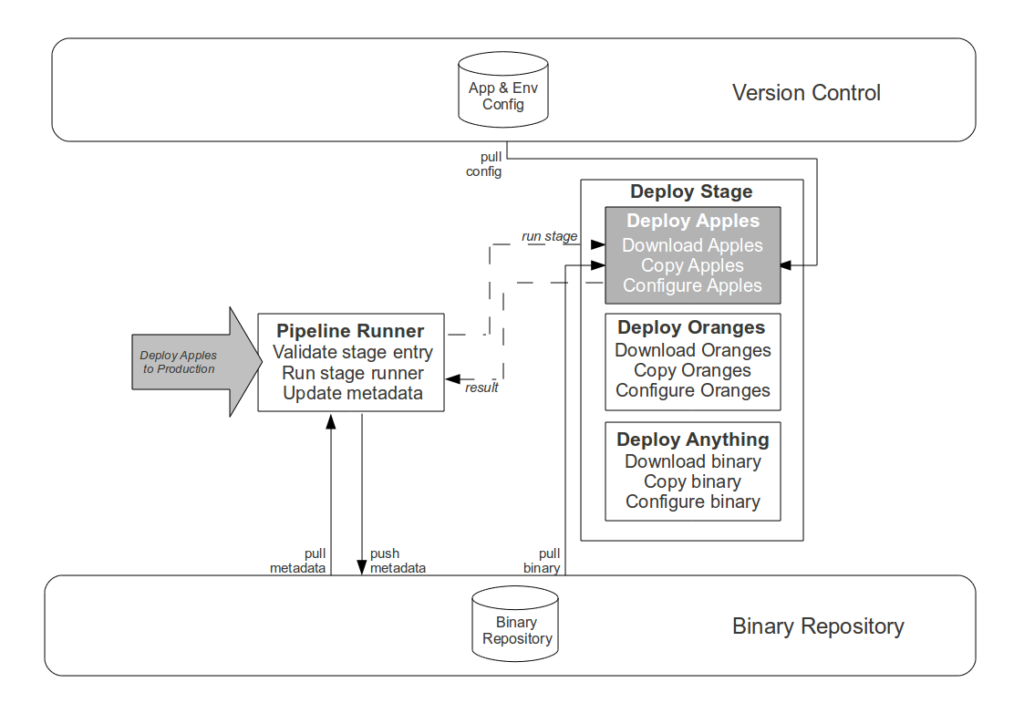

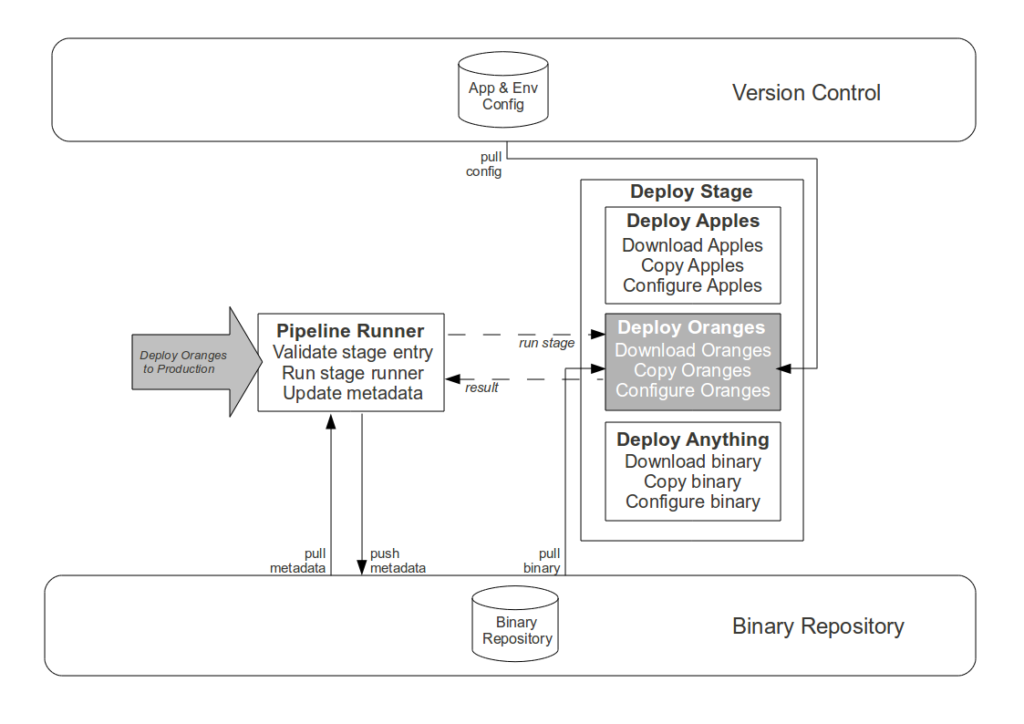

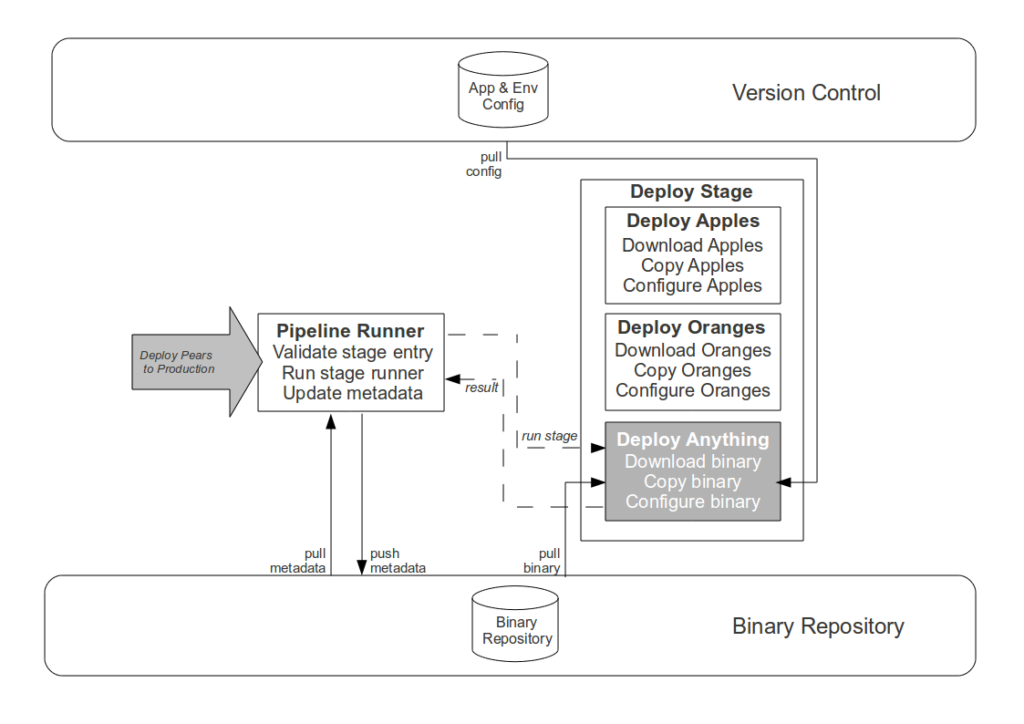

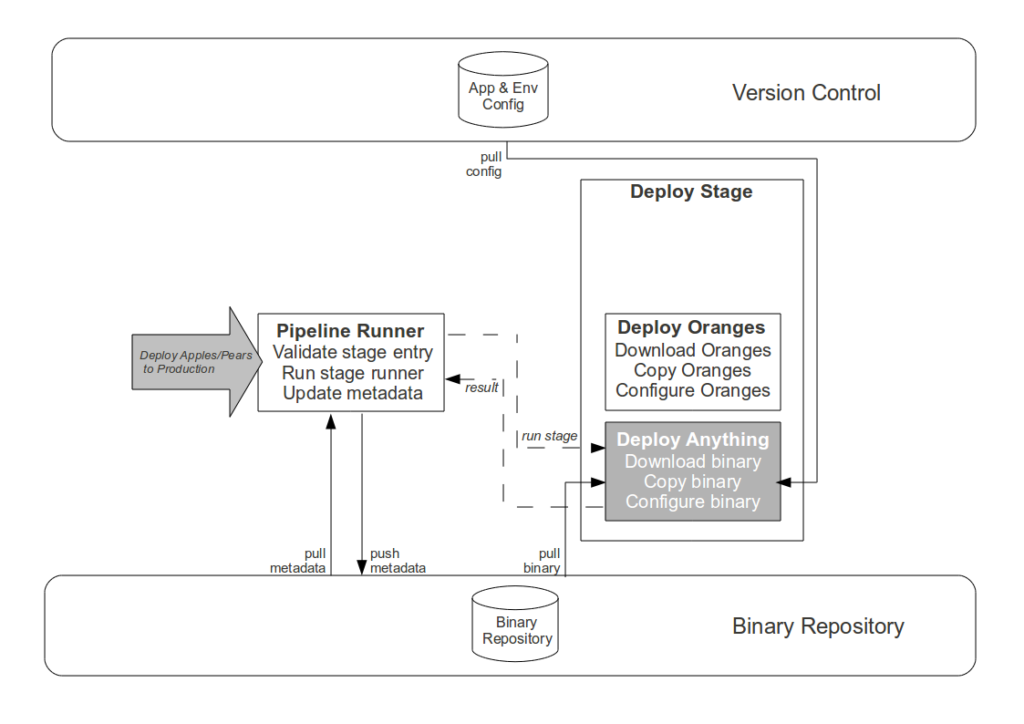

- Different deployment methods. Developers used a Ruby script to deploy applications, whereas server administrators used a Perl script driven by a Subversion tag to deploy platforms of applications.

- Different database migration methods. Developers used Maven to migrate applications, whereas database administrators used a set of Perl scripts driven by the same Subversion tag to migrate platforms of applications.

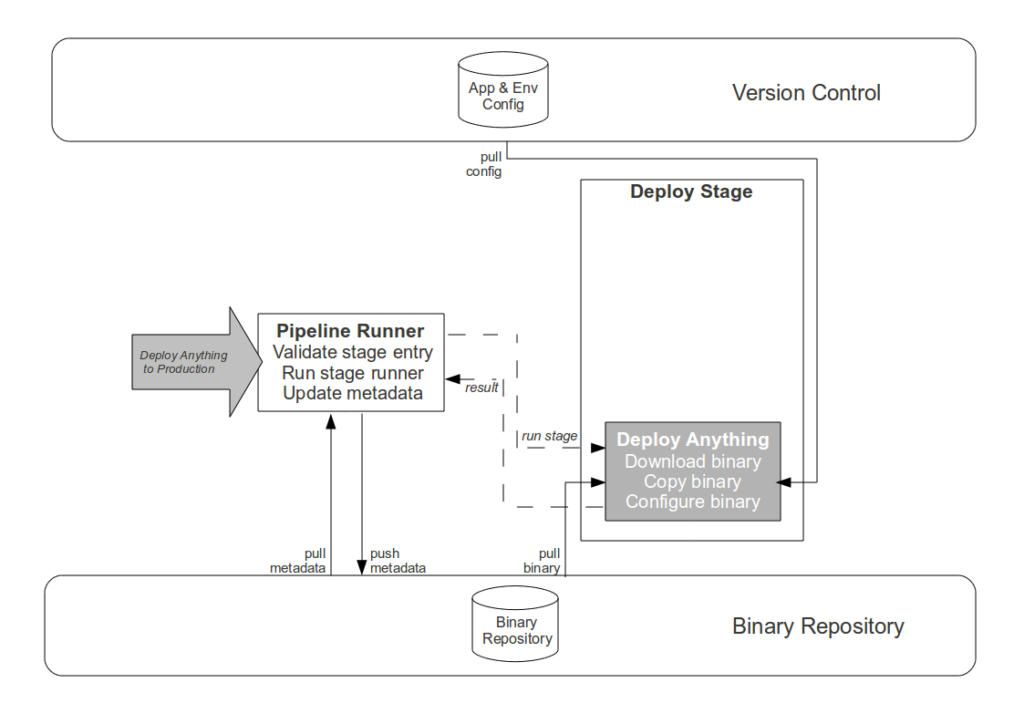

As automated release management is not our core business function, we initially examined a number of commercial and open-source off-the-shelf products such as ThoughtWorks Go, LinkedIn Glu, AnthillPro, and Jenkins. However, despite identifying Go as an attractive option, we reluctantly decided to build a custom pipeline. As our application estate already consisted of ~30 applications, we were concerned that the cost of migrating them to a new release management product would be disproportionately high. Furthermore, a well-established Continuous Integration solution of Artifactory Pro and a 24-agent TeamCity build farm was in situ, and recommending that we discard such a large financial investment with no identifiable upfront value would have been professional irresponsibility bordering upon consultancy. We listened to Bodart’s Law and reconciled ourselves to building a low-cost, highly scalable pipeline that could support our applications in order of business and operational value.

With trust between Development and Operations at a low ebb, our first priority was to improve platform build times. Because Maven was used to build and release the entire application estate, the use of non-unique snapshots in conjunction with the Maven Release plugin meant that a platform build could take up to 60 minutes, recompile the application binaries, and frequently fail due to transitive dependencies. To overcome this problem we decreed that using the Maven Release plugin violated Build Your Binaries Only Once, and we confined Maven to a bounded CI context of clean-verify. Standalone application binaries were built at fixed versions using the Axel Fontaine solution, and a custom Ant script was written to transform Maven snapshots into releasable artifacts. As a result of these changes, platform build times shrank from 60 minutes to 10 minutes, improving release cadence and restoring trust between Development and Operations.
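To illustrate what building each binary once at a fixed version means in practice, the sketch below derives a unique release version from a snapshot version and a CI build number. It is a minimal sketch under stated assumptions: the `release_version` function and the `<snapshot base>.<build number>` scheme are hypothetical, and neither the exact mechanics of the Axel Fontaine solution nor our Ant script are reproduced here. One way a CI job might apply the result is via the Versions Maven Plugin, e.g. `mvn versions:set -DnewVersion=1.0.42`, before running `mvn clean verify` once.

```python
import re


def release_version(snapshot_version: str, build_number: int) -> str:
    """Turn a snapshot version such as "1.0-SNAPSHOT" into a fixed, unique
    release version such as "1.0.42" by appending the CI build number.

    Hypothetical scheme for illustration: each CI build produces exactly one
    binary at a version that is never rebuilt or reused.
    """
    match = re.fullmatch(r"(\d+(?:\.\d+)*)-SNAPSHOT", snapshot_version)
    if match is None:
        raise ValueError(f"not a snapshot version: {snapshot_version!r}")
    if build_number < 0:
        raise ValueError("build number must be non-negative")
    return f"{match.group(1)}.{build_number}"


if __name__ == "__main__":
    print(release_version("1.0-SNAPSHOT", 42))  # prints 1.0.42
```

Because the build number is unique per CI run, two builds can never publish different binaries under the same version, which is the property the Maven Release plugin's rebuild step undermined.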

In the meantime, some of our senior Operations staff had been drawing up a new process for starting/stopping applications. While the existing release procedure of deploy -> stop -> migrate -> set current version -> start was compatible with the Decouple Deployment From Release principle, the start/stop scripts used by Operations were coupled to Apache Tomcat wrapper scripts as a legacy of prior usage. The Operations team were aware that new applications were being developed for Jetty and Java Web Server, and it was collectively acknowledged that the existing model left Operations in the undesirable state of Responsibility Without Authority. To resolve this, Operations proposed that all future application binaries should be ZIP archives containing zero-parameter start and stop shell scripts, and this became the first version of our Binary Interface. This strategy empowered Development teams to choose whichever technology was most appropriate to solve business problems, and decoupled Operations teams from knowledge of different start/stop implementations.
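The value of the Binary Interface is that Operations tooling only needs to know two script names. A minimal Python sketch of that uniform invocation follows; the `start.sh`/`stop.sh` file names and the `run_lifecycle_script` helper are hypothetical, since the contract only requires zero-parameter start and stop shell scripts inside each unpacked ZIP archive.

```python
import subprocess
from pathlib import Path


def run_lifecycle_script(app_dir: Path, action: str) -> int:
    """Invoke the zero-parameter start or stop script shipped with an unpacked
    application archive and return the script's exit code.

    The caller never learns whether the script wraps Tomcat, Jetty, or
    something else; it only relies on the (hypothetical) file names.
    """
    if action not in ("start", "stop"):
        raise ValueError(f"unsupported action: {action!r}")
    script = app_dir / f"{action}.sh"  # hypothetical naming convention
    if not script.is_file():
        raise FileNotFoundError(f"{script} missing: archive breaks the Binary Interface")
    # Run via sh from inside the application directory, passing no arguments,
    # because the contract is a zero-parameter script.
    result = subprocess.run(["sh", script.name], cwd=app_dir)
    return result.returncode
```

Whether a given archive's scripts wrap Tomcat, Jetty, or Java Web Server is invisible to the caller, which is the decoupling the Binary Interface was designed to achieve.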

Although the Binary Interface proved over time to be successful, the understandable desire to decommission the Perl deployment scripts meant that early versions of the Binary Interface also called for deployment, database migration, and symlinking scripts to be provided in each ZIP archive. It was successfully argued that this conflated the need for binary-specific start/stop policies with application-neutral deploy/migrate policies, and as a result the latter responsibilities were earmarked for our pipeline.
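The resulting split of responsibilities can be sketched as follows: the pipeline owns the application-neutral deploy, migrate, and set-current-version steps, while stop and start are delegated to the scripts in each archive, following the deploy -> stop -> migrate -> set current version -> start order of our release procedure. This is a hypothetical Python illustration rather than our pipeline's code; the directory layout (`<app_root>/<version>` plus a `current` symlink) and all function names are assumptions.

```python
import os
from pathlib import Path
from typing import Callable, Dict, List

# Release order from the existing procedure. Only "stop" and "start" come from
# the scripts inside the application ZIP; every other step is
# application-neutral and owned by the pipeline.
RELEASE_ORDER = ["deploy", "stop", "migrate", "set_current_version", "start"]
BINARY_OWNED = {"stop", "start"}

Step = Callable[[], None]


def set_current_version(app_root: Path, version: str) -> None:
    """Repoint app_root/current at app_root/<version> (hypothetical layout).

    A temporary link is renamed over the old one, so on POSIX the swap is
    atomic and "current" is never missing.
    """
    if not (app_root / version).is_dir():
        raise FileNotFoundError(app_root / version)
    tmp_link = app_root / "current.tmp"
    if tmp_link.is_symlink():
        tmp_link.unlink()  # clear a stale link left by an interrupted release
    os.symlink(version, tmp_link)  # relative link to the version directory
    os.replace(tmp_link, app_root / "current")


def release(pipeline_steps: Dict[str, Step], binary_steps: Dict[str, Step]) -> List[str]:
    """Run the release steps in order and record which side ran each one."""
    log = []
    for name in RELEASE_ORDER:
        owner = "binary" if name in BINARY_OWNED else "pipeline"
        steps = binary_steps if owner == "binary" else pipeline_steps
        steps[name]()  # a missing step raises KeyError and halts the release
        log.append(f"{owner}:{name}")
    return log
```

Keeping symlinking in the pipeline means it is written once for every application, rather than re-implemented in each archive as early versions of the Binary Interface required.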

Implementing a cross-team plan of action for database migration has proven far more challenging. The considerable amount of customer-sensitive data in our Production databases encouraged risk aversion, and there was a sizeable technology gap: different Development teams used different Maven plugins, and database administrators used a set of unfathomable Perl scripts run from a Subversion tag. That risk aversion and gulf in knowledge meant that a cross-team migration strategy was slow to emerge, and its implementation remains in progress. However, we did achieve a Quick Win and removed the insidious Subversion coupling after a source code move in Subversion caused an unnecessary database migration failure. A pipeline stage was introduced to deliver application SQL from Artifactory to the Perl script source directories on the database servers. While this solution did not provide full database migration, it resolved an immediate problem for all teams and left us better positioned for full database migration at a later date.
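A stage of this kind can be small. The following Python sketch assumes the application artifact has already been downloaded from Artifactory as a ZIP file and that its SQL lives under a `sql/` directory; both the layout and the `deliver_sql` name are hypothetical, and the Perl migration scripts themselves are left untouched.

```python
import shutil
import zipfile
from pathlib import Path
from typing import List


def deliver_sql(artifact_zip: Path, target_dir: Path, prefix: str = "sql/") -> List[str]:
    """Copy every .sql entry under `prefix` in the artifact into target_dir
    (e.g. a Perl script source directory) and return the delivered names."""
    target_dir.mkdir(parents=True, exist_ok=True)
    delivered = []
    with zipfile.ZipFile(artifact_zip) as archive:
        for entry in archive.infolist():
            name = entry.filename
            if entry.is_dir() or not name.startswith(prefix) or not name.endswith(".sql"):
                continue
            # Keep only the base name so no entry can escape target_dir;
            # assumes SQL file names are unique within one artifact.
            dest = target_dir / Path(name).name
            with archive.open(entry) as src, open(dest, "wb") as out:
                shutil.copyfileobj(src, out)
            delivered.append(dest.name)
    return sorted(delivered)
```

Sourcing the SQL from a versioned artifact rather than a Subversion path means a source code move can no longer break a migration, which was the coupling this stage removed.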

With the benefit of hindsight, it is clear that the above tooling discrepancies, disparate release processes, and communication issues were rooted in Development and Operations historically working in separate silos, as forewarned by Conway’s Law. These problems were solved by Development and Operations teams coming together to create and implement cross-team policies, and this formed a template for future co-operation on the Strangler Pipeline.