Should an organisation in peril start its journey towards IT enabled growth by investing in IT delivery first, or product development? Should it Build The Thing Right with Continuous Delivery first, or Build The Right Thing with Lean Product Development?

Introduction

The software revolution has caused a profound economic and technological shift in society. To remain competitive, organisations must rapidly explore new product offerings as well as exploiting established products. New ideas must be continuously validated and refined with customers, if product/market fit and repeatable sales are to be found.

That is extremely difficult when an organisation has the 20th century, pre-Internet IT As A Cost Centre organisational model. There will be a functionally segregated IT department, that is accounted for as a cost centre that can only incur costs. There will be an annual plan of projects, each with its scope, resources, and deadlines fixed in advance. IT delivery teams will be stuck in long-term Discontinuous Delivery, and IT executives will only be incentivised to meet deadlines and reduce costs.

If product experimentation is not possible and product/market fit for established products declines, overall profitability will suffer. What should be the first move of an organisation in such perilous conditions? Should it invest first in Lean Product Development to Build The Right Thing, and uncover new product offerings as quickly as possible? Or should it invest in Continuous Delivery to Build The Thing Right first, and create a powerful growth engine for locating future product/market fit?

Build The Thing Right first

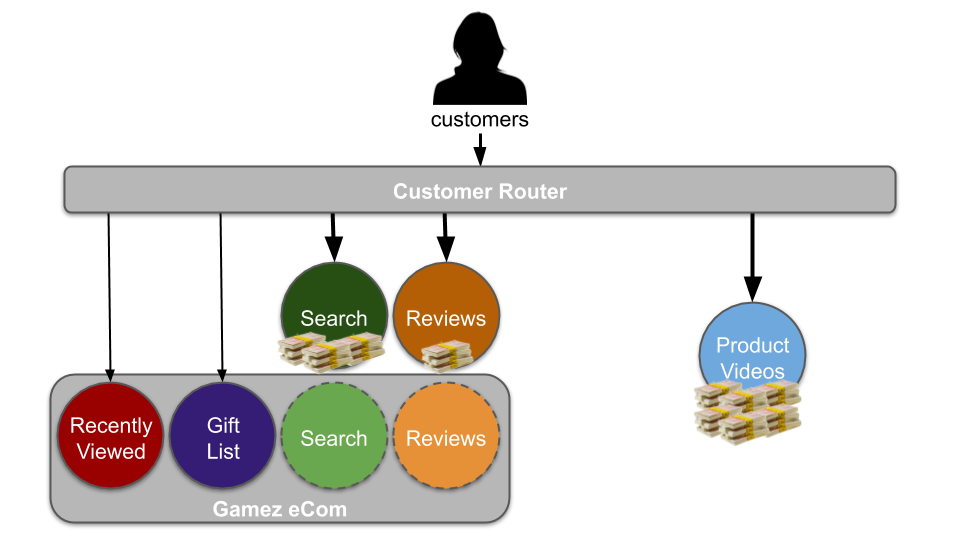

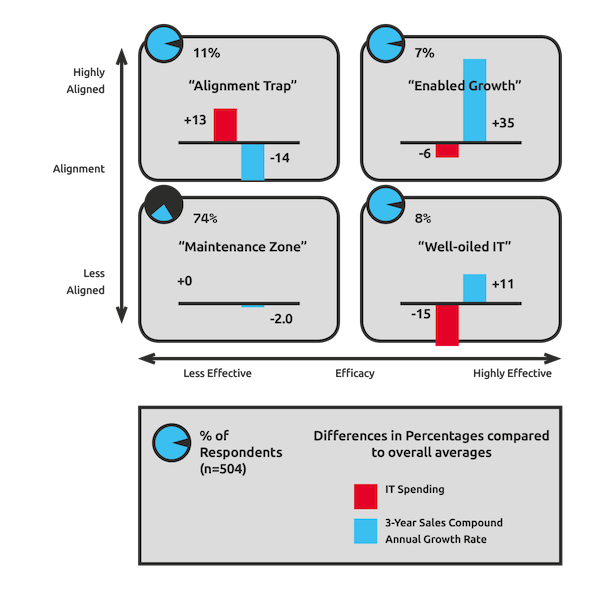

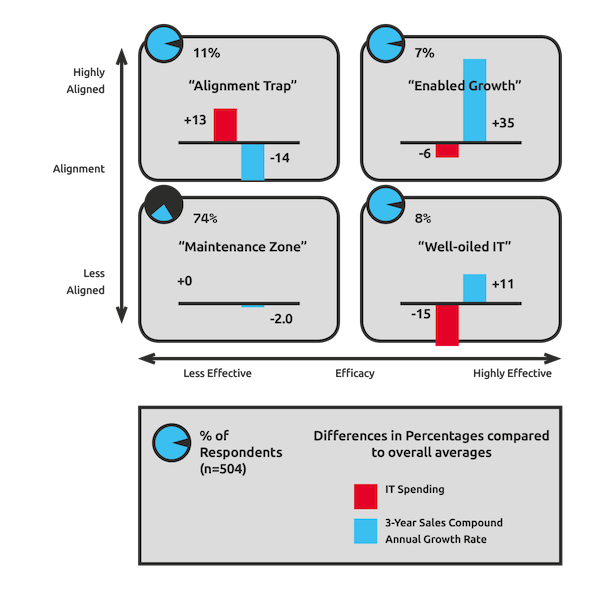

In 2007, David Shpilberg et al published a survey of ~500 executives in Avoiding the Alignment Trap in IT. 74% of respondents reported an under-performing, undervalued IT department, unaligned with business objectives. 15% of respondents had an effective IT department, with variable business alignment, below average IT spending, and above average sales growth. Finally, 11% were in a so-called Alignment Trap, with negative sales growth despite above average IT spending and tight business alignment.

Avoiding the Alignment Trap in IT (Shpilberg et al) – source praqma.com

Shpilberg et al report “general ineffectiveness at bringing projects in on time and on the dollar, and ineffectiveness with the added complication of alignment to an important business objective”. The authors argue organisations that prematurely align IT with business objectives will fall into an Alignment Trap. They conclude organisations should build a highly effective IT department first, and then align IT projects to business objectives. In other words, Build The Thing Right before trying to Build The Right Thing.

The conclusions of Avoiding the Alignment Trap in IT are naive, because they ignore the implications of IT As A Cost Centre. An organisation with ineffective IT will undoubtedly suffer if it increases business alignment and investment in IT as is. However, that is a consequence of functionally siloed product and IT departments, and the antiquated project delivery model tied to IT As A Cost Centre. Projects will run as a Large Batch Death Spiral for months without customer feedback, and invariably result in a dreadful waste of time and money. When Shpilberg et al define success as “delivered projects with promised functionality, timing, and cost”, they are measuring manipulable project outputs, rather than customer outcomes linked to profitability.

Build The Right Thing first

It is hard to Build The Right Thing without first learning to Build The Thing Right, but it is possible. If flow is improved through co-dependent product and IT teams, the negative consequences of IT As A Cost Centre can be reduced. New revenue streams can be unlocked and profitability increased, before a full Continuous Delivery programme can be completed.

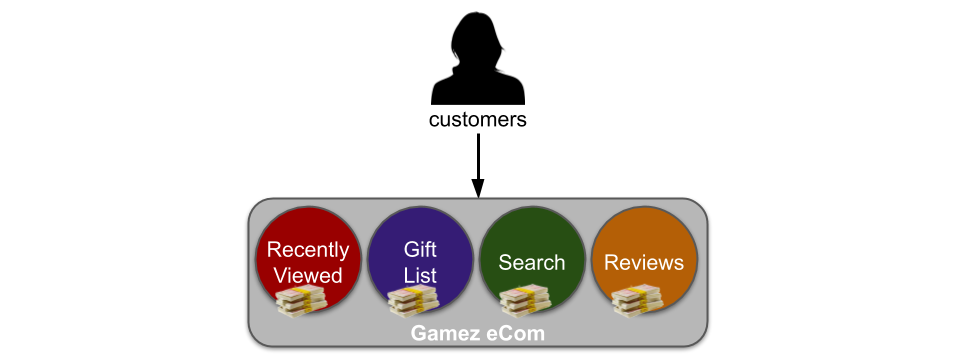

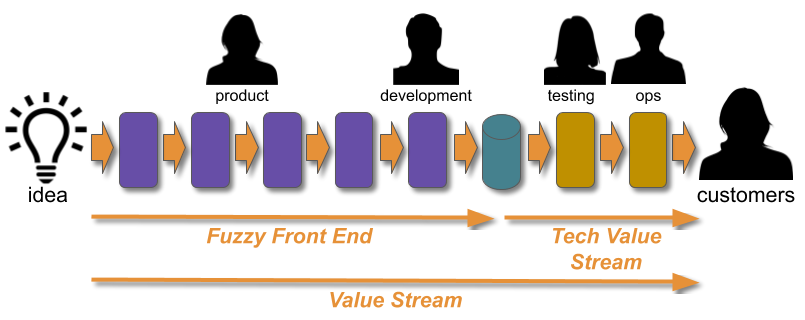

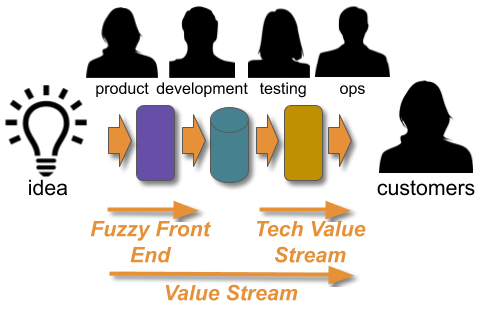

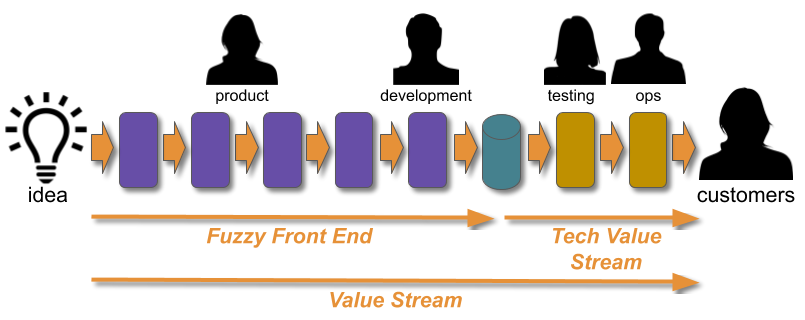

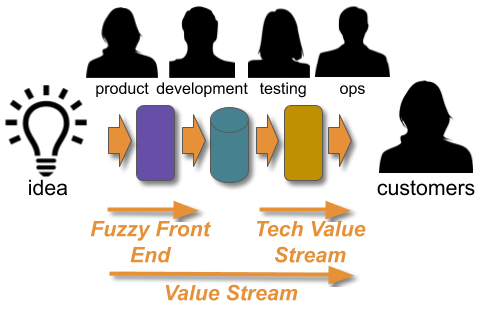

An organisation will have a number of value streams to convert ideas into product offerings. Each value stream will have a Fuzzy Front End of product and development activities, and a technology value stream of build, testing, and operational activities. The time from ideation to customer is known as cycle time.

Flow in a value stream can be improved by:

- understanding the flow of ideas

- reducing batch sizes

- quantifying value and urgency

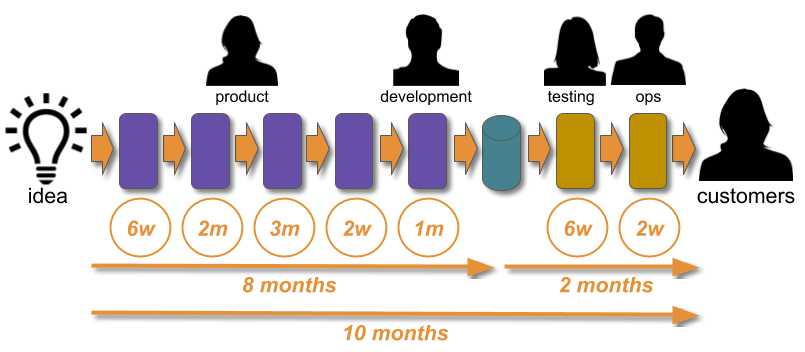

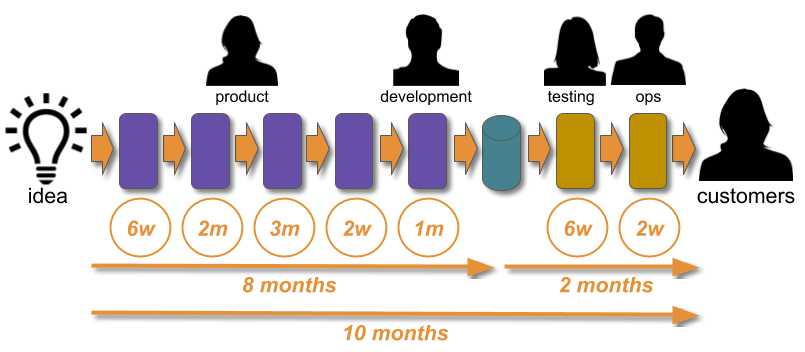

The flow of ideas can be understood by conducting a Value Stream Mapping with senior product and IT stakeholders. Visualising the activities and teams required for ideas to reach customers will identify an approximate cycle time, and the sources of waste that delay feedback. A Value Stream Mapping will usually uncover a shocking amount of rework and queue times, with the majority in the Fuzzy Front End.

For example, a Value Stream Mapping might reveal a 10 month cycle time, with 8 months spent on ideas bundled up as projects in the Fuzzy Front End, and 2 months spent on IT in the technology value stream. Starting out with Build The Thing Right would only tackle 20% of cycle time.

Reducing batch sizes means unbundling projects into separate features, reducing the size of features, and using Work In Process (WIP) Limits across product and IT teams. Little’s Law guarantees distilling projects into small, per-feature deliverables and restricting in-flight work will result in shorter cycle times. In Lean Enterprise, Jez Humble, Joanne Molesky, and Barry O’Reilly describe reducing batch sizes as “the most important factor in systemically increasing flow and reducing variability”.

Quantifying value and urgency means working with product stakeholders to estimate the Cost Of Delay of each feature. Cost Of Delay is the economic benefit a feature could generate over time, if it was available immediately. Considering how time will affect how a feature might increase revenue, protect revenue, reduce costs, and/or avoid costs is extremely powerful. It encourages product teams to reduce cycle time by shrinking batch sizes and eliminating Fuzzy Front End activities. It uncovers shared assumptions and enables better trade-off decisions. It creates a shared sense of urgency for product and IT teams to quickly deliver high value features. As Don Reinertsen says in the The Principles of Product Development Flow, “if you only measure one thing, measure the Cost Of Delay”.

For example, a manual customer registration task generates £100Kpa of revenue, and is performed by one £50Kpa employee. The economic benefit of automating that task could be calculated as £100Kpa of revenue protection and £50Kpa of cost reduction, so £5.4Kpw is lost while the feature does not exist. If there is a feature with a Cost Of Delay greater than £5.4Kpw, the manual task should remain.

Build The Right Thing case study – Maersk Line

Black Swan Farming – Maersk Line by Joshua Arnold and Özlem Yüce demonstrates how an organisation can successfully Build The Right Thing first, by understanding the flow of ideas, reducing batch sizes, and quantifying value and urgency. In 2010, Maersk Line IT was a £60M cost centre with 20 outsourced development teams. Between 2008 and 2010 the median cycle time in all value streams was 150 days. 62% of ~3000 requirements in progress were stuck in Fuzzy Front End analysis.

Arnold and Yüce were asked to deliver more value, flow, and quality for a global booking system with a median cycle time of 208 days, and quarterly production releases in IT. They mapped the value stream, shrank features down to the smallest unit of deliverable value, and introduced Cost Of Delay into product teams alongside other Lean practices.

After 9 months, improvements in Fuzzy Front End processes resulted in a 48% reduction in median cycle time to 108 days, an 88% reduction in defect count, and increased customer satisfaction. Furthermore, using Cost Of Delay uncovered 25% of requirements were a thousand times more valuable than the alternatives, which led to a per-feature return on investment six times higher than the Maersk Line IT average. By applying the same Lean principles behind Continuous Delivery to product development prior to additional IT investment, Arnold and Yüce achieved spectacular results.

Build The Right Thing and Build The Thing Right

If an organisation in peril tries to Build The Right Thing first, it risks searching for product/market fit without the benefits of fast customer feedback. If it tries to Build The Thing Right first, it risks spending time and money on Continuous Delivery without any tangible business benefits.

An organisation should instead aim to Build The Right Thing and Build The Thing Right from the outset. A co-evolution of product development and IT delivery capabilities is necessary, if an organisation is to achieve the necessary profitability to thrive in a competitive market.

This approach is validated by Dr. Nicole Forsgren et al in Accelerate. Whereas Avoiding The Alignment Trap In IT was a one-off assessment of business alignment in IT As A Cost Centre, Accelerate is a multi-year, scientifically rigorous study of thousands of organisations worldwide. Interestingly, Lean product development is modelled as understanding the flow of work, reducing batch sizes, incorporating customer feedback, and team empowerment. The data shows:

- Continuous Delivery and Lean product development both predict superior software delivery performance and organisational culture

- Software delivery performance and organisational culture both predict superior organisational performance in terms of profitability, productivity, and market share

- Software delivery performance predicts Lean product development

On the reciprocal relationship between software delivery performance and Lean product development, Dr. Nicole Forsgren et al conclude “the virtuous cycle of increased delivery performance and Lean product management practices drives better outcomes for your organisation”.

Exapting product development and technology

An organisational ambition to Build The Right Thing and Build The Thing Right needs to start with the executive team. Executives need to recognise an inability to create new offerings or protect established products equates to mortal peril. They need to share a vision of success with the organisation that articulates the current crisis, describes a state of future prosperity, and injects urgency into day-to-day work.

The executive team should introduce the Improvement Kata into all levels of the organisation. The Improvement Kata encourages problem solving via experimentation, to proceed towards a goal in iterative, incremental steps amid ambiguities, uncertainties, and difficulties. It is the most effective way to manage a gradual co-emergence of Lean Product Development and Continuous Delivery.

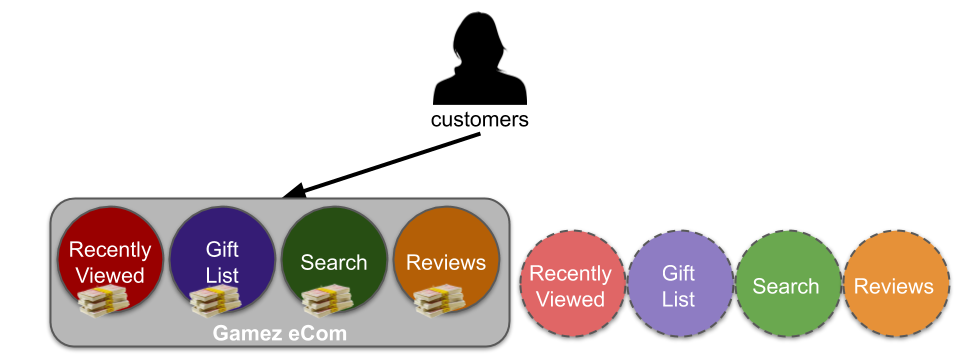

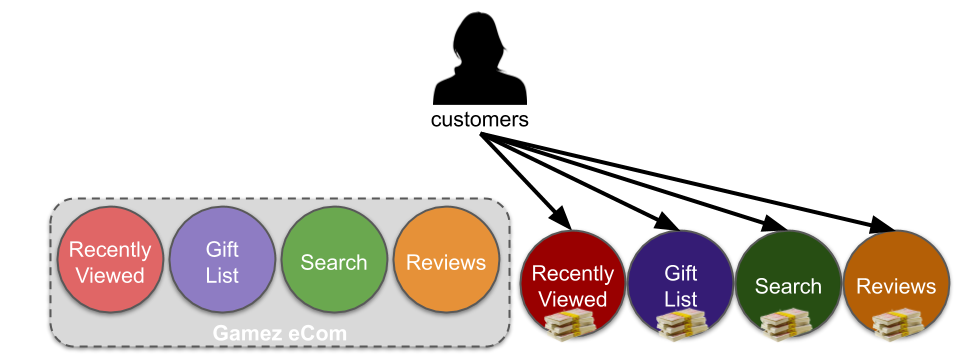

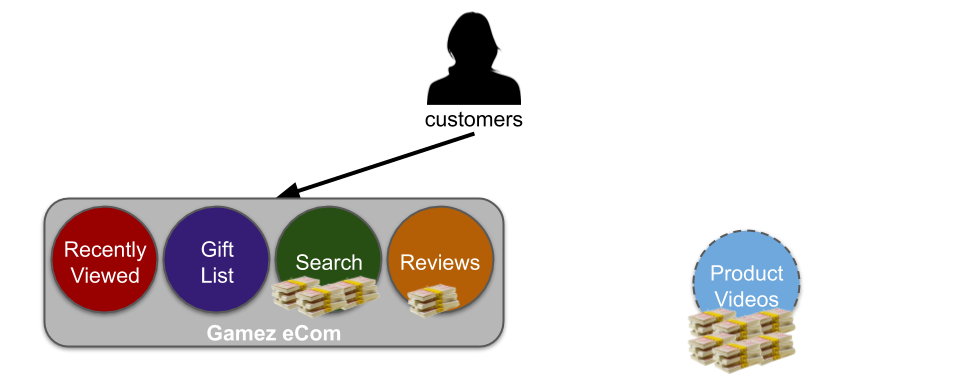

Experimentation with organisational change should include a transition from IT As A Cost Centre to IT As A Business Differentiator. This means technology staff moving from the IT department to work in long-lived, outcome-oriented teams in one or more product departments, which are accounted for as profit centres and responsible for their own investment decisions. One way to do this is to create a Digital department of co-located product and technology staff, with shared incentives to create new product offerings. Handoffs and activities in value streams will be dramatically reduced, resulting in much faster cycle times and tighter customer feedback loops.

Instead of an annual budget and a set of fixed scope projects, there needs to be a rolling budget that funds a rolling plan linked to desired outcomes and strategic business objectives. The scope, resources, and deadlines of the rolling plan should be constantly refined by validated learnings from customers, as delivery teams run experiments to find problem/solution fit and problem/market for a particular business capability.

Those delivery teams should be cross-functional, with all the necessary personnel and skills to apply Design Thinking and Lean principles to problem solving. This should include understanding the flow of ideas, reducing batch sizes, and the quantifying value and urgency. As Lean Product Development and Continuous Delivery capabilities gradually emerge, it will become much easier to innovate with new product offerings and enhance established products.

It might take months or years of investment, experimentation, and disruption before an organisation has adopted Lean Product Development and Continuous Delivery across all its value streams. It is important to protect delivery expectations and staff welfare by making changes one value stream at a time, by looking for existing products or new product offerings stuck in Discontinuous Delivery.

Acknowledgements

Thanks to Emily Bache, Ozlem Yuce, and Thierry de Pauw for reviewing this article.

Further Reading

- Lean Software Development by Mary and Tom Poppendieck

- Designing Delivery by Jeff Sussna

- The Essential Drucker by Peter Drucker

- Measuring Continuous Delivery by Steve Smith

- The Cost Centre Trap by Mary Poppendieck

- Making Work Visible by Dominica DeGrandis