Pipelining inter-dependent applications as uber-artifacts is unscalable

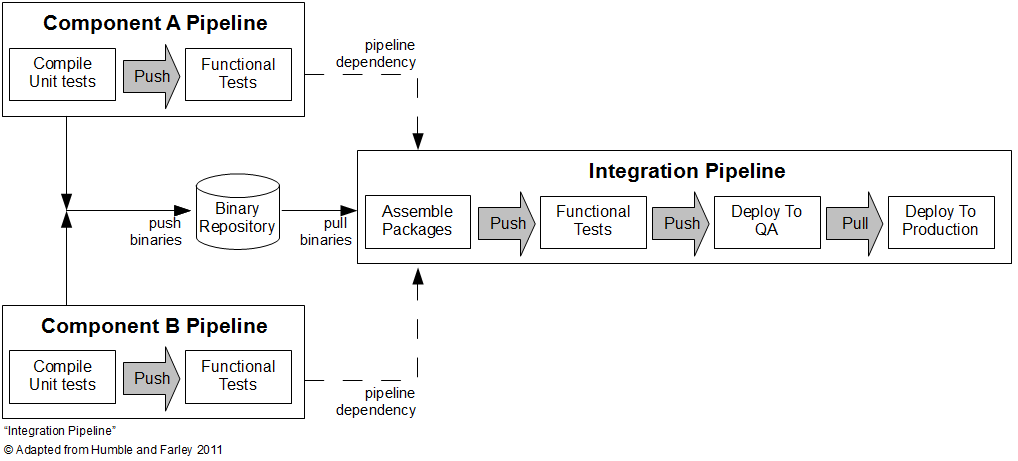

Achieving the Continuous Delivery of an application is a notable feat in any organisation, but how do we build on such success and pipeline more complex, inter-dependent applications? In Continuous Delivery, Dave Farley and Jez Humble suggest an Integration Pipeline architecture as follows:

In an Integration Pipeline, the successful commit of a set of related application artifacts triggers their packaging as a single releasable artifact. That artifact then undergoes the normal test-release cycle, with an increased focus upon fast feedback and visibility of binary status.

Although Eric Minick’s assertion that this approach is “broken for complex architectures” seems overly harsh, it is true that its success is predicated upon the quality of the tooling, specifically the packaging implementation.

For example, a common approach is the Uber-Artifact (also known as Build Of Builds or Mega Build), where an archive is created containing the application artifacts and a version manifest. This suffers from a number of problems:

- Inconsistent release mechanism. The binary deploy process (copy) differs from the uber-binary deploy process (copy and unzip)

- Duplicated artifact persistence. Committing an uber-artifact to the artifact repository re-commits the constituent artifacts within the archive

- Lack of version visibility. The version manifest must be extracted from the uber-artifact to determine constituent versions

- Non-incremental release mechanism. An uber-artifact cannot easily diff constituent versions and must be fully extracted to the target environment

Of the above, the most serious problem is the barrier to incremental releases, as it directly impairs pipeline scalability. As the application estate grows over time in size and/or complexity, an inability to identify and skip the re-release of unchanged application artifacts can only increase cycle time.
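To make the incremental release point concrete, here is a minimal sketch of the comparison a release mechanism needs to perform. It assumes a version manifest can be read as a simple mapping of artifact name to version; the function name `plan_incremental_release` and the sample artifact names are hypothetical and are not taken from any particular tool.

```python
def plan_incremental_release(
    deployed: dict[str, str], candidate: dict[str, str]
) -> dict[str, list[str]]:
    """Compare two version manifests (artifact name -> version).

    Hypothetical helper: 'deployed' is what the target environment
    currently runs, 'candidate' is what the new release asks for.
    """
    # Artifacts that are new, or whose version has changed, must be released.
    deploy = sorted(
        name for name, version in candidate.items()
        if deployed.get(name) != version
    )
    # Artifacts already at the requested version can be skipped.
    skip = sorted(
        name for name, version in candidate.items()
        if deployed.get(name) == version
    )
    # Artifacts no longer listed in the candidate should be retired.
    remove = sorted(name for name in deployed if name not in candidate)
    return {"deploy": deploy, "skip": skip, "remove": remove}


# Illustrative manifests only; names and versions are made up.
deployed = {"orders": "1.4.0", "payments": "2.1.0", "search": "0.9.3"}
candidate = {"orders": "1.4.0", "payments": "2.2.0", "catalogue": "1.0.0"}
plan = plan_incremental_release(deployed, candidate)
```

With these sample manifests, only `catalogue` and `payments` are released, `orders` is skipped and `search` is retired. An uber-artifact makes this comparison awkward because both manifests are buried inside archives that must be extracted first.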

Returning to the intent of the Integration Pipeline architecture, we simply require a package that expresses the relationship between the related application artifacts. In an uber-artifact, the value resides in the version manifest – so why not make that the artifact?
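As a rough illustration of treating the version manifest as the artifact, the sketch below models a manifest as a small, serialisable document that refers to constituent artifacts by name and version rather than embedding them. The class name `ReleaseManifest`, its fields and the JSON layout are assumptions made for this example, not a format prescribed by the book or by any tool.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ReleaseManifest:
    """Hypothetical manifest: a release version plus constituent versions."""

    release: str
    artifacts: dict[str, str]  # artifact name -> version

    def to_json(self) -> str:
        # sort_keys gives a canonical form, so equal manifests serialise equally.
        return json.dumps(
            {"release": self.release, "artifacts": self.artifacts},
            sort_keys=True,
            indent=2,
        )

    @staticmethod
    def from_json(text: str) -> "ReleaseManifest":
        data = json.loads(text)
        return ReleaseManifest(data["release"], dict(data["artifacts"]))

    def digest(self) -> str:
        # A checksum of the canonical JSON can identify the manifest's content.
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()


# Illustrative values only; names and versions are made up.
manifest = ReleaseManifest("2012.03.1", {"orders": "1.4.0", "payments": "2.2.0"})
published = manifest.to_json()  # this small file is what gets committed
restored = ReleaseManifest.from_json(published)
```

Because the manifest is a few hundred bytes of text, committing it does not re-commit the constituent binaries, and the constituent versions are visible without unpacking an archive.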

You’ve rather put words into our mouth there. What we actually say is (p361) “The first stage of the integration pipeline should create a package (or packages) suitable for deployment by composing the appropriate collections of binaries.”

Admittedly that’s a short and ambiguous sentence. But what we were trying to imply is that if, for example, your app is installed as an RPM or set of RPMs, this is where you might create them and the various dependency metadata in them to ensure the correct versions get installed at runtime.

The main thrust of Eric Minick’s post, as I understand it, is that you shouldn’t create an “uber build” and instead tag the various binaries – I believe the key sentence is “But rather than retrieve and assemble those related components into a final application in as part of a super build, we instead, use a release set to logically collect the component versions.” I think that’s an absolutely valid approach, partly for the reasons you describe. We don’t address which approach you should take or indeed discuss this issue at all in the book.

I should point out though that a good counterexample to this approach is Facebook, who do appear to create an uber-build: http://techcrunch.com/2011/05/30/facebook-source-code/

Eric’s approach of a “release set” appears to be similar to my approach; it’ll be interesting to see how and where they differ once I’ve written it up.

I’d forgotten about the Facebook counter-example, and it’s a good one. My understanding is that they release an enormous uber-binary and manage a ridiculous number of feature toggles. It clearly works for them, but I’d be interested to hear more – on their versioning strategy, for example.

Looking forward to the write-up, Steve.

From what I’ve seen so far, it looks like you may have a file with the manifest. We’re using a relational database to track our “snapshots”. Seems like we may be seeing some philosophical convergence though.

It’s needed. Deployment time dependencies are a mess out there.

Hi Eric, I agree that architectural convergence is likely. Any quibbling will likely be over implementation. Getting some information out there is important, as there aren’t many CD experience reports that venture beyond single application pipelines.