Continuous Delivery enables batch size reduction

Continuous Delivery aims to overcome the large delivery costs traditionally associated with releasing software, and in The Principles of Product Development Flow Don Reinertsen describes delivery cost as a function of transaction cost and holding cost. While transaction costs are incurred by releasing a product increment, holding costs are incurred by not releasing a product increment and are proportional to batch size – the quantity of in flight value-adding features, and the unit of work within a value stream.

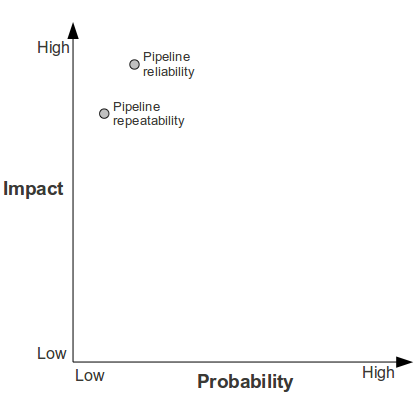

The above graph shows that a reduction in transaction cost alone will not dramatically impact delivery cost without a corresponding reduction in batch size, and this mirrors our assertion that automation alone cannot improve cycle time. However, Don also states that “the primary controllable factor that enables small batches is low transaction cost per batch“, and by implementing Continuous Delivery we can minimise transaction costs and subsequently release smaller change sets more frequently, obtaining the following benefits:

- Improved cycle time – smaller change sets reduce queued work (e.g. pending deployments), and due to Little’s Law cycle time is decreased without constraining demand (e.g. fewer deployments) or increasing capacity (e.g. more deployment staff)

- Improved flow – smaller change sets reduce the probability of unpredictable, costly value stream blockages (e.g. multiple deployments awaiting signoff)

- Improved feedback – smaller change sets shrink customer feedback loops, enabling product development to be guided by Validated Learning (e.g. measure revenue impact of new user interface)

- Improved risk – smaller change sets reduce the quantity of modified code in each release, decreasing both defect probability (i.e. less code to misbehave) and defect cost (i.e. less complex code to debug and fix)

- Improved overheads – smaller change sets reduce transaction costs by encouraging optimisations, with more frequent releases necessitating faster tooling (e.g. multi-core processors for Continuous Integration) and streamlined processes (e.g. enterprise-grade test automation)

- Improved efficiency – smaller change sets reduce waste by narrowing defect feedback loops, and decreasing the probability of defective code harming value-adding features (e.g. user interface change dependent upon defective API call)

- Improved ownership – smaller change sets reduce the diluted sense of responsibility in large releases, increasing emotional investment by limiting change owners (e.g. single developer responsible for change set, feedback in days not weeks)

Despite the business-facing value proposition of Continuous Delivery, there may be no incentive from the business team to increase release cadence. However, the benefits of releasing smaller change sets more frequently – improved feedback, risk, overheards, efficiency, and ownership – are also operationally advantageous, and this should be viewed as an opportunity to educate those unaware of the power of batch size reduction. Such a scenario is similar to the growth of Continuous Integration a decade ago, when the operational benefits of frequently integrating smaller code changes overcame the lack of business incentive to increase the rate of source code integration.

Business requirements = minimum release cadence

Operational requirements = maximum release cadence

A persistent problem with increasing release cadence is Eric Ries’ assertion that “the benefits of small batches are counter-intuitive“, and in organisations long accustomed to a high delivery cost it seems only natural to artificially constrain demand or increase capacity to smooth the value stream. For example, our organisation has a 28 day cycle time of which 7 days are earmarked for release testing. In this situation, decreasing cadence to a 36 day cycle time appears less costly than increasing cadence to a 14 day cycle time, as release testing will ostensibly decrease to 19% of our cycle time rather than increase to 50%. However, this ignores both the holding cost of constraining demand and the long-unimplemented optimisations we would be compelled to introduce to achieve a lower release cadence (e.g. increased level of test automation).

Improving cycle time is not just about using Continuous Delivery to reduce transaction costs – we must also be courageous, and release more with less.

![Economic Batch Size [Reinertsen]](https://www.stevesmith.tech/wp-content/uploads/2013/03/Economic-Batch-Size-Reinertsen-1024x852.jpg)