The Strangler Pipeline is grounded in autonomation

Previous entries in the Strangler Pipeline series:

- The Strangler Pipeline – Introduction

- The Strangler Pipeline – Challenges

- The Strangler Pipeline – Scaling Up

- The Strangler Pipeline – Legacy and Greenfield

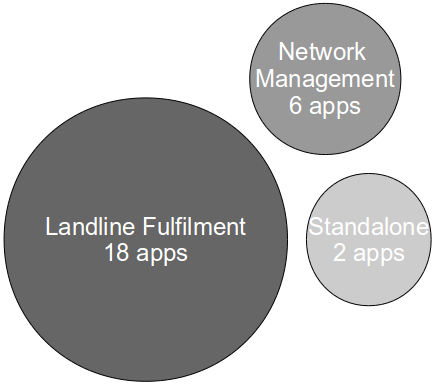

The introduction of Continuous Delivery to an organisation is an exciting opportunity for Development and Operations to Automate Almost Everything into a Repeatable Reliable Process. At Sky Network Services we aspired to emulate organisations such as LMAX, Springer, and 7Digital by building a fully automated Continuous Delivery pipeline to manage our Landline Fulfilment and Network Management platforms. We began by identifying our Development and Operations stakeholders and establishing a business-facing programme to automate our value stream. We emphasised to our stakeholders that automation was only a step towards our end goal of improving upon our cycle time of 26 days, and that the Theory Of Constraints warns that automating the wrong constraint will have little or no impact upon cycle time.

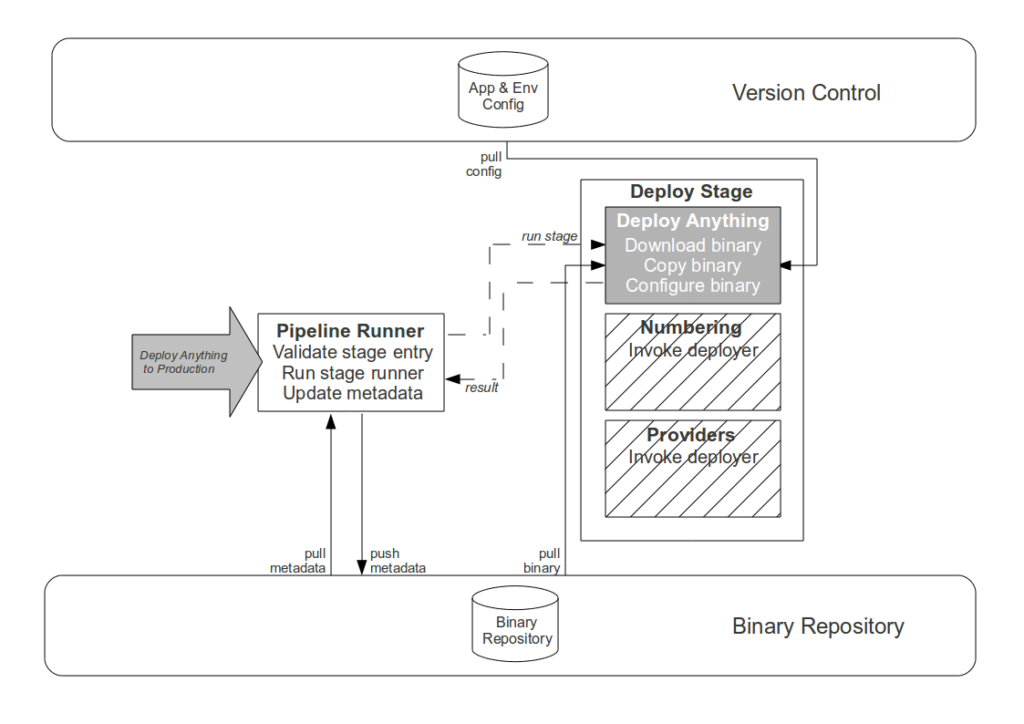

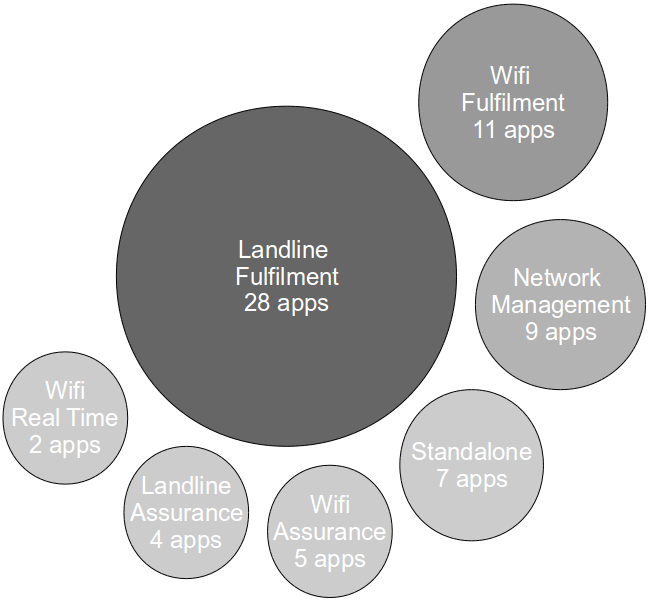

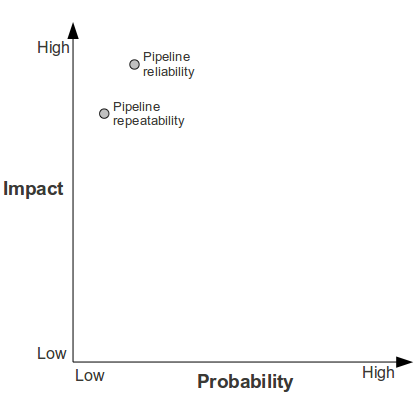

Our determination to value cycle time optimisation above automation in the Strangler Pipeline was soon justified by the influx of new business projects. The unprecedented growth in our application estate led to a new goal of retaining our existing cycle time while integrating our greenfield application platforms. As our core business domain is telecommunications, not Continuous Delivery, we concluded that fully automating our pipeline would not be cost-effective. By following Jez Humble and Dave Farley’s advice to “optimise globally, not locally”, we focussed pipeline stakeholder meetings upon value stream constraints and successfully moved to an autonomation model aimed at stakeholder-driven optimisations.

Described by Taiichi Ohno as one of “the two pillars of the Toyota Production System”, autonomation is defined as automation with a human touch. It refers to the combination of human intelligence and automation where full automation is considered uneconomical. While the most prominent example of autonomation is problem detection at Toyota, we have applied autonomation within the Strangler Pipeline as follows:

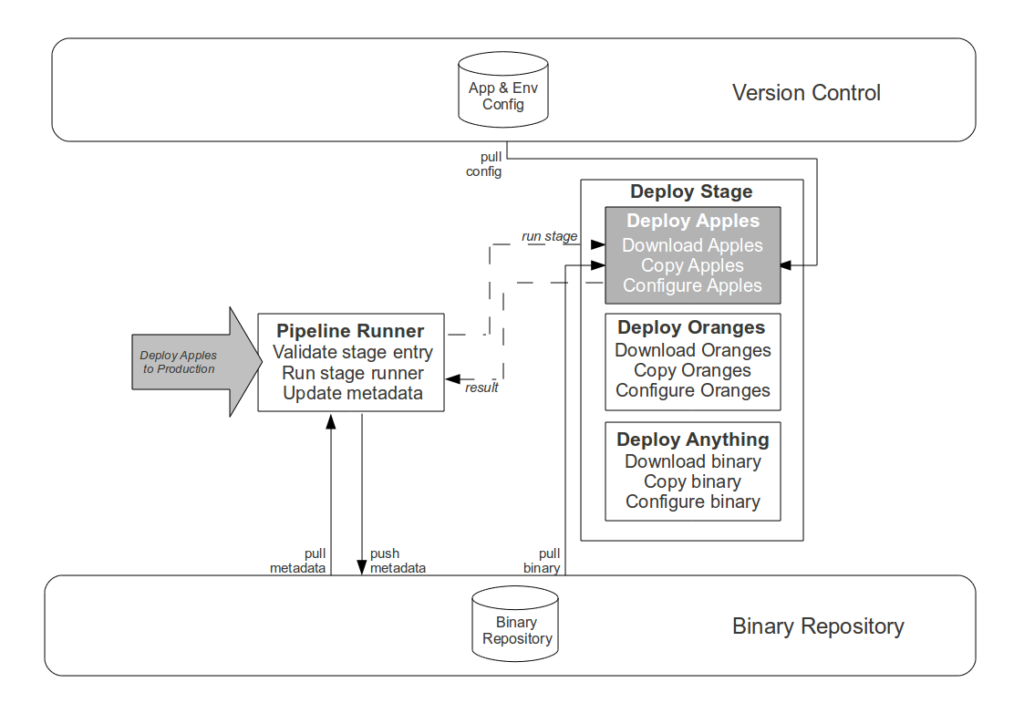

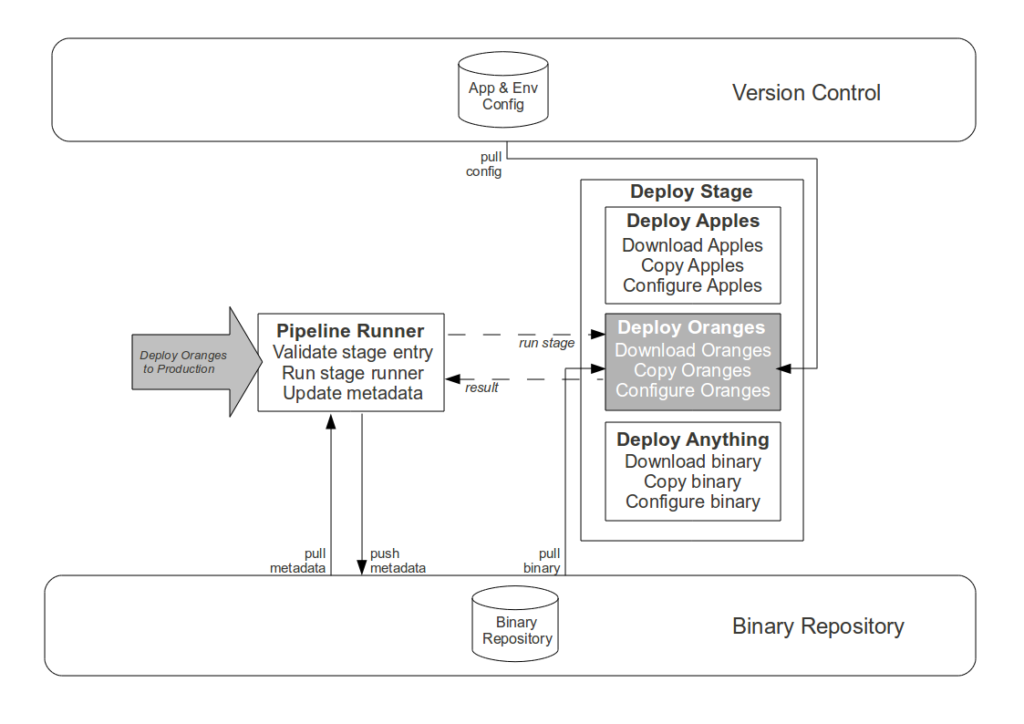

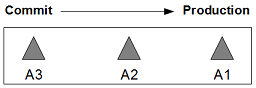

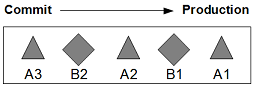

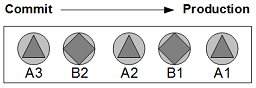

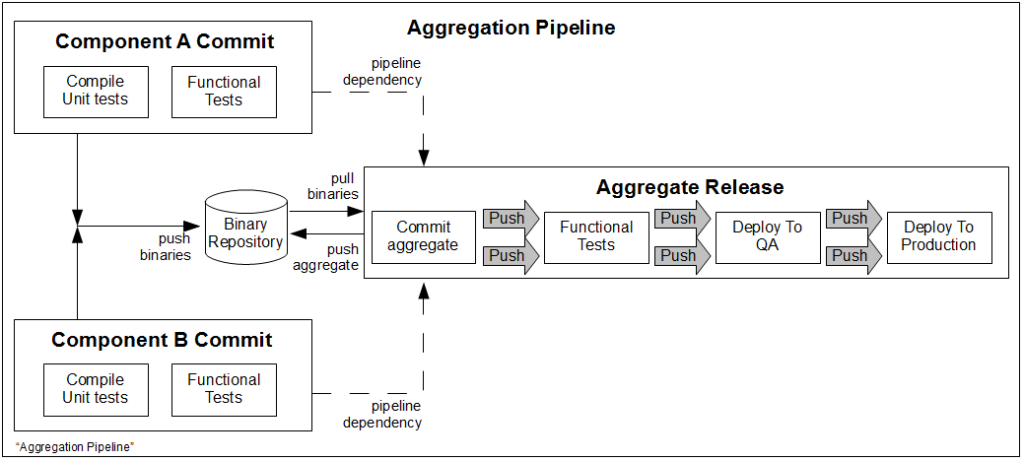

- Commit stage. Automatically creating an aggregate artifact whenever a constituent application artifact is committed would reduce the processing time of platform creation, but it would have zero impact upon cycle time and would replace Operations’ responsibility for release versioning with arbitrary build numbers. Instead, the Development teams are empowered to track application compatibilities and create aggregate binaries via a user interface, with application versions selectable in picklists and aggregate version numbers auto-completed in order to reduce errors.

- Failure detection and resolution. Although automated rollback or self-healing releases would harden the Strangler Pipeline, we agreed that their absence was not a constraint upon cycle time and that such a solution would be costly to implement. When a pipeline failure occurs it is recorded in the metadata of the application artifact, and we Stop The Line to prevent further use of that artifact until a human has logged onto the relevant server(s) to diagnose and correct the problem.

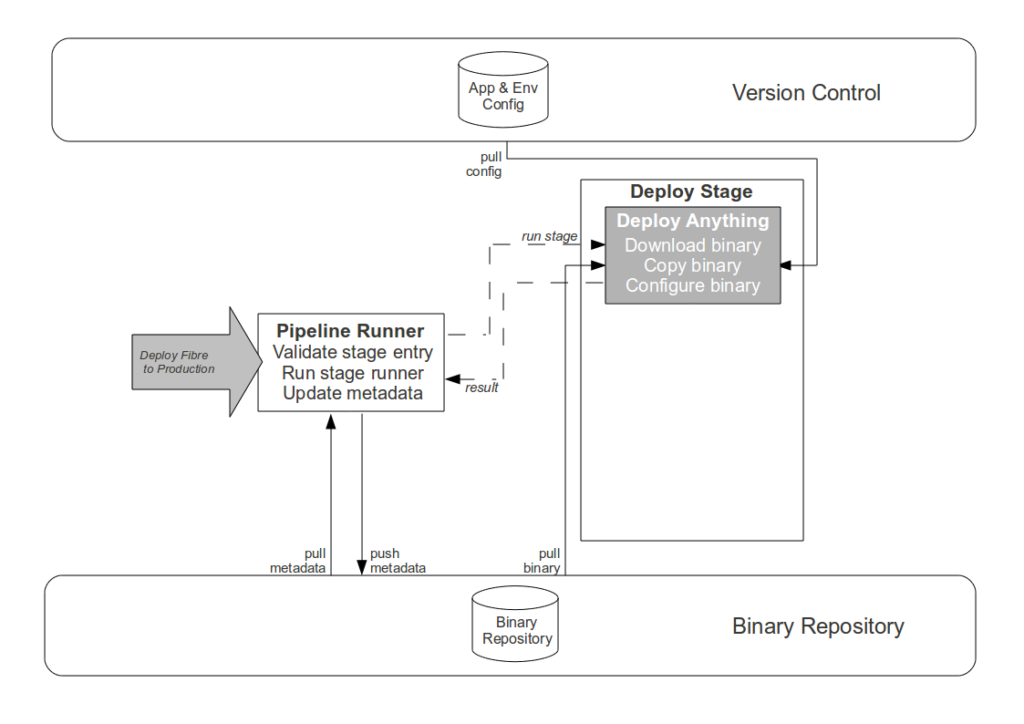

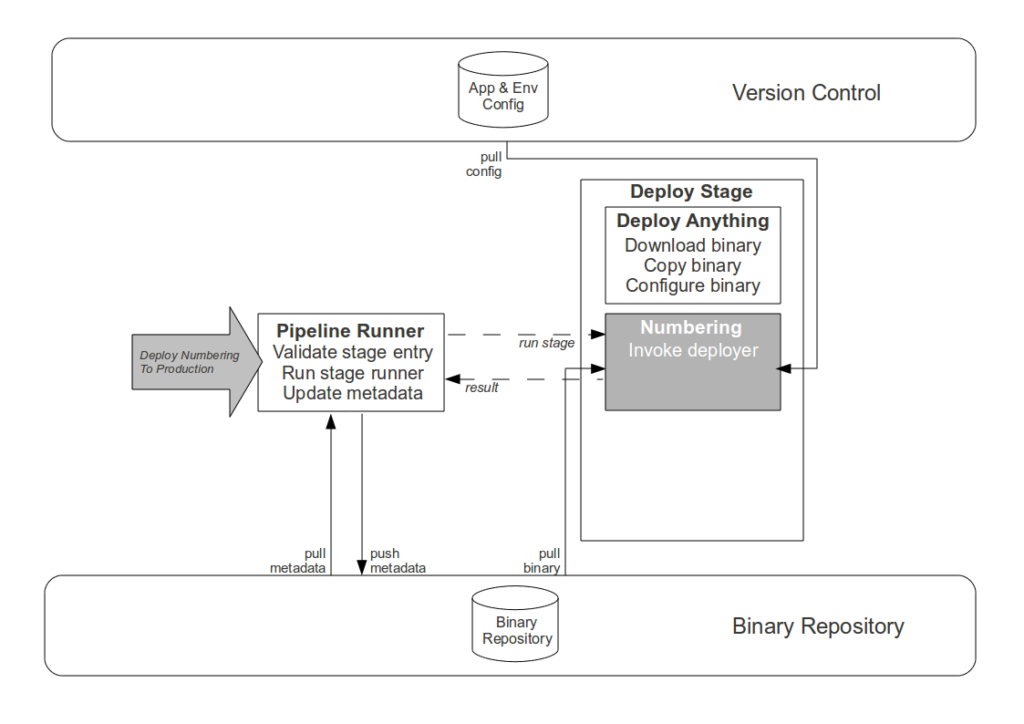

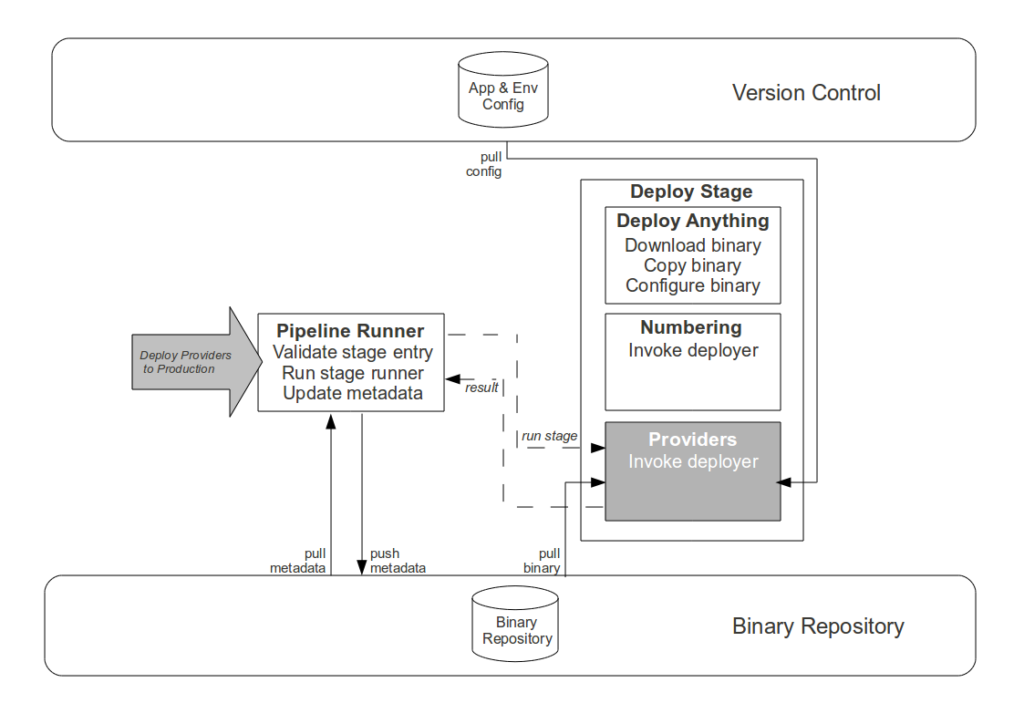

- Pipeline updates. Although the high frequency of Strangler Pipeline updates implies value in further automation of its own Production release process, a single pipeline update cannot improve cycle time and we wish to retain scheduling flexibility. As pipeline updates increase the probability of release failure, it would be unwise to release a new pipeline version immediately prior to a Production platform release. Instead, a Production request is submitted for each signed-off pipeline artifact, and while the majority are released immediately, the Operations team reserve the right to delay if their calendar warns of a pending Production platform release.
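The Stop The Line behaviour described in the list above can be illustrated with a minimal Python sketch. It assumes that a failure is stored under a `failure` key in the artifact metadata and that only an explicit human action clears it. The names `Artifact`, `record_failure`, `clear_failure`, and `deploy` are hypothetical and are not taken from the Strangler Pipeline itself.

```python
from dataclasses import dataclass, field
from typing import Callable


class LineStoppedError(RuntimeError):
    """Raised when an operation is attempted on a stopped artifact."""


@dataclass
class Artifact:
    name: str
    version: str
    # Hypothetical metadata store: a "failure" entry means the line is stopped.
    metadata: dict = field(default_factory=dict)

    @property
    def stopped(self) -> bool:
        return "failure" in self.metadata


def record_failure(artifact: Artifact, stage: str, reason: str) -> None:
    """Record the failure on the artifact so that every later stage can see it."""
    artifact.metadata["failure"] = {"stage": stage, "reason": reason}


def clear_failure(artifact: Artifact, resolved_by: str) -> None:
    """Called by a human once the problem has been diagnosed and corrected."""
    failure = artifact.metadata.pop("failure", None)
    if failure is not None:
        history = artifact.metadata.setdefault("history", [])
        history.append({**failure, "resolved_by": resolved_by})


def deploy(artifact: Artifact, environment: str, action: Callable[[], None]) -> str:
    """Run a pipeline operation, refusing to proceed while the line is stopped."""
    if artifact.stopped:
        raise LineStoppedError(
            f"{artifact.name} {artifact.version} is stopped: "
            f"{artifact.metadata['failure']}"
        )
    try:
        action()
    except Exception as exc:
        # No automated rollback: record the failure and leave it for a human.
        record_failure(artifact, environment, str(exc))
        raise
    return f"{artifact.name} {artifact.version} deployed to {environment}"
```

The sketch deliberately contains no rollback or self-healing logic. The only automated behaviour is detection and blocking, while diagnosis and correction remain with a person, which is the division of labour that autonomation implies.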

Autonomation emphasises the role of root cause analysis, and after every major release failure we hold a session to identify the root cause of the problem, the lessons learned, and the necessary counter-measures to permanently solve the problem. At the time of writing our analysis shows that 13% of release failures were caused by pipeline defects, 10% by misconfiguration of TeamCity Deployment Builds, and the majority originated in our siloed organisational structure. This data provides an opportunity to measure our adoption of the principles of Continuous Delivery according to Shuhari:

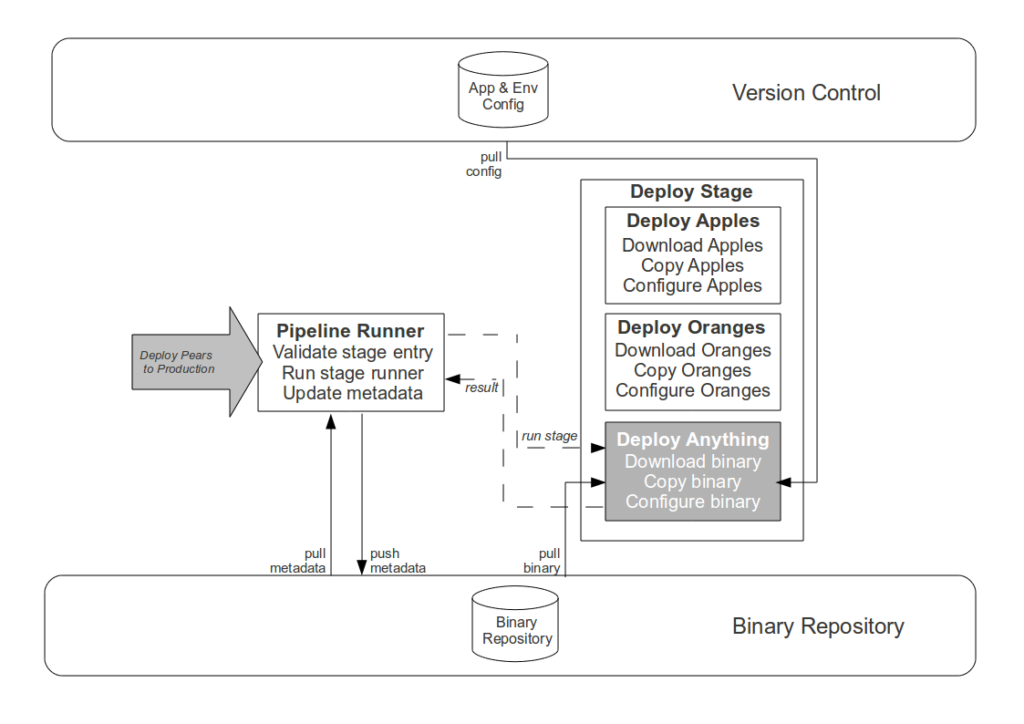

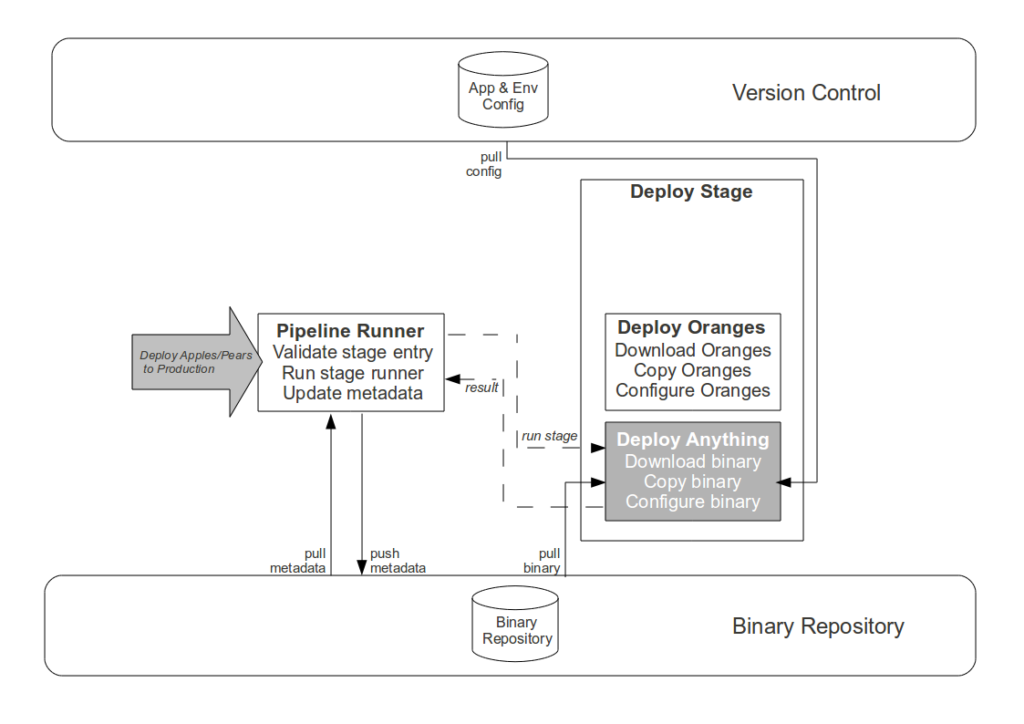

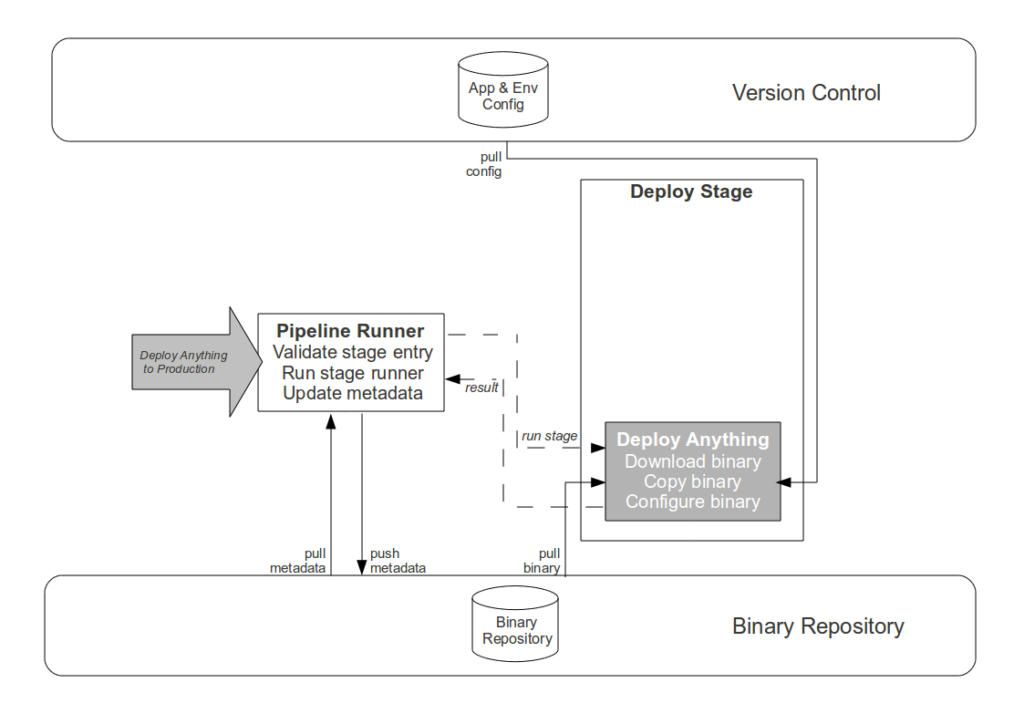

- shu – By scaling our automated release mechanism to manage greenfield and legacy application platforms, we have implemented Repeatable Reliable Process, Automate Almost Everything, and Keep Everything In Version Control.

- ha – By introducing combinational static analysis tests and a pipeline user interface to reduce our defect rate and TeamCity usability issues, we have matured to Bring The Pain Forward and Build Quality In.

- ri – Sky Network Services is a Waterscrumfall organisation where Business, Development, and Operations work concurrently on different projects with different priorities, which means we sometimes fall foul of Conway’s Law and compete over constrained resources to the detriment of cycle time. We have yet to achieve Done Means Released, Everybody Is Responsible, and Continuous Improvement.

An example of our organisational structure impeding cycle time would be the first release of the new Messaging application 186-13, which resulted in the following value stream audit:

While each pipeline operation was successful in less than 20 seconds, the disparity between Commit start time and Production finish time indicates significant delivery problems. Substantial wait times between environments contributed to a lead time of 63 days, far in excess of our average lead time of 6 days. Our analysis showed that Development started work on Messaging 186-13 before Operations ordered the necessary server hardware, and as a result hardware lead times restricted environment availability at every stage. No individual or team was at fault for this situation – the fault lay in the system, with Development and Operations working upon different business projects at the time with non-aligned goals.
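The distinction between processing time and lead time in such an audit can be made concrete with a small Python sketch. It assumes that each pipeline operation records a start and a finish timestamp. The `StageRun` type and `audit` function are hypothetical, and the timestamps in the demo are invented for illustration and are not the Messaging 186-13 figures.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class StageRun:
    stage: str
    started: datetime
    finished: datetime


def audit(runs: list[StageRun]) -> dict:
    """Split lead time into time spent processing and time spent waiting."""
    ordered = sorted(runs, key=lambda run: run.started)
    # Processing time: how long the pipeline operations themselves took.
    processing = sum((run.finished - run.started for run in ordered), timedelta())
    # Lead time: first stage start to last stage finish.
    lead = ordered[-1].finished - ordered[0].started
    # Wait time between each consecutive pair of stages.
    waits = {
        f"{a.stage} -> {b.stage}": b.started - a.finished
        for a, b in zip(ordered, ordered[1:])
    }
    return {
        "lead_time": lead,
        "processing_time": processing,
        "wait_time": lead - processing,
        "waits": waits,
    }


if __name__ == "__main__":
    # Invented timestamps, for illustration only.
    runs = [
        StageRun("Commit", datetime(2013, 1, 7, 9, 0, 0), datetime(2013, 1, 7, 9, 0, 15)),
        StageRun("Acceptance", datetime(2013, 1, 28, 14, 0, 0), datetime(2013, 1, 28, 14, 0, 12)),
        StageRun("Production", datetime(2013, 2, 25, 10, 0, 0), datetime(2013, 2, 25, 10, 0, 18)),
    ]
    for key, value in audit(runs).items():
        print(key, value)
```

When the processing time is measured in seconds and the wait time in weeks, as in the audit above, further automation of the pipeline operations cannot meaningfully reduce lead time. The constraint lies in the gaps between stages.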

With the majority of the Sky Network Services application estate now managed by the Strangler Pipeline, it seems timely to reflect upon our goal of retaining our original cycle time of 26 days. Our data suggests that we have been successful, with the cycle time of our Landline Fulfilment and Network Management platforms now 25 days and our greenfield platforms between 18 and 21 days. However, examples such as Messaging 186-13 remind us that cycle time cannot be improved by automation alone, and we must now redouble our efforts to implement Done Means Released, Everybody Is Responsible, and Continuous Improvement. By building the Strangler Pipeline we have followed Donella Meadows’ change management advice to “reduce the probability of destructive behaviours and to encourage the possibility of beneficial ones”, and given all we have achieved I am confident that we can Continuously Improve together.