Ali Asad Lotia and I have published an article on the Equal Experts website, describing what you should and (probably) shouldn’t try from SRE…

Author: Steve Smith

“I’m often asked by senior leaders in different organisations how they should measure software delivery, and kickstart a Continuous Delivery culture. Accelerate and Measuring Continuous Delivery have some of the answers, but not all of them.

This is for anyone wondering how to effectively measure Product, Delivery, and Operations teams as one, in their own organisation…”

Steve Smith

TL;DR:

- Teams working in an IT cost centre are often judged on vanity measures such as story points and incident count.

- Teams need to be measured on outcomes linked to business goals, deployment throughput, and availability.

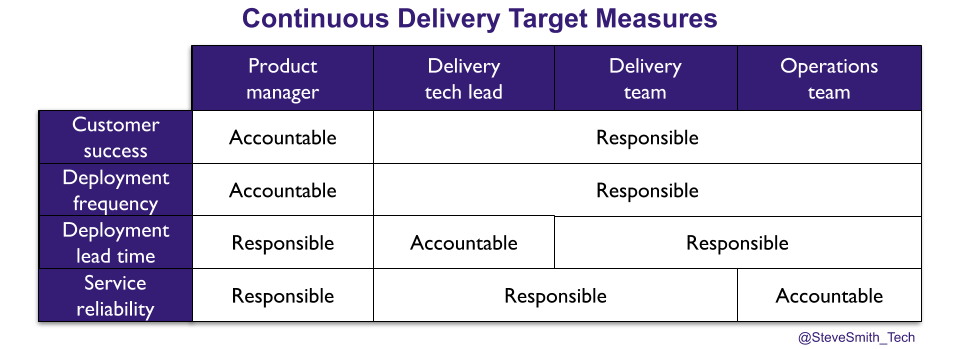

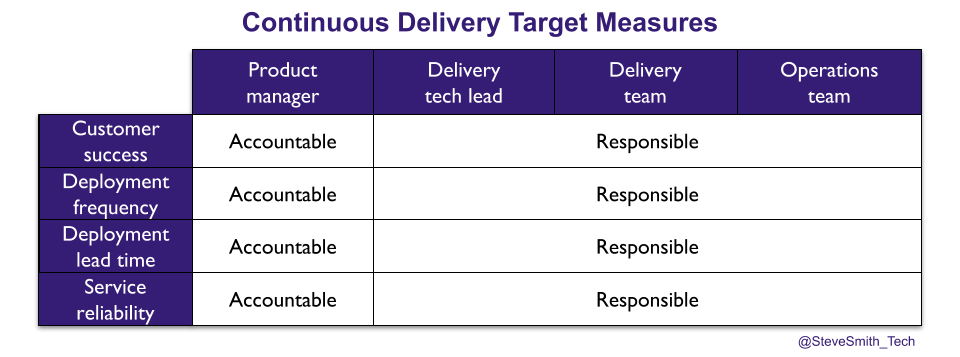

- A product manager, not a delivery lead or tech lead, must be accountable for all target measures linked to a product and the teams working on it.

Introduction

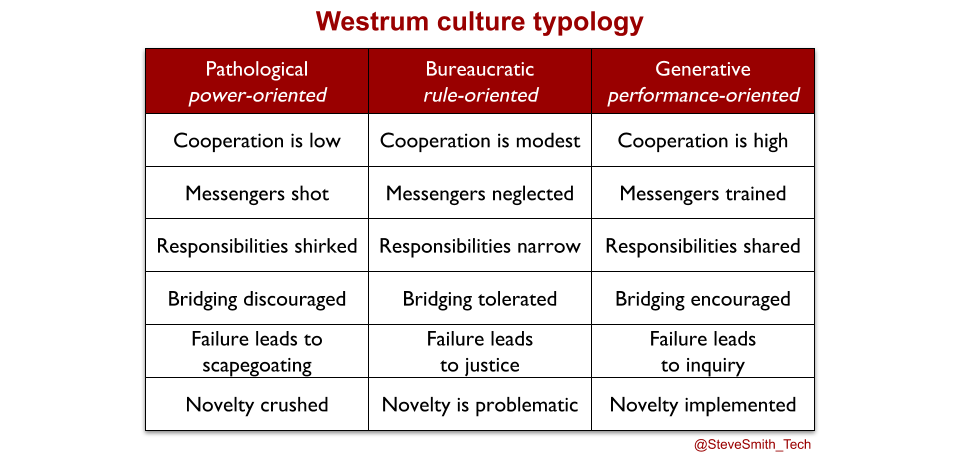

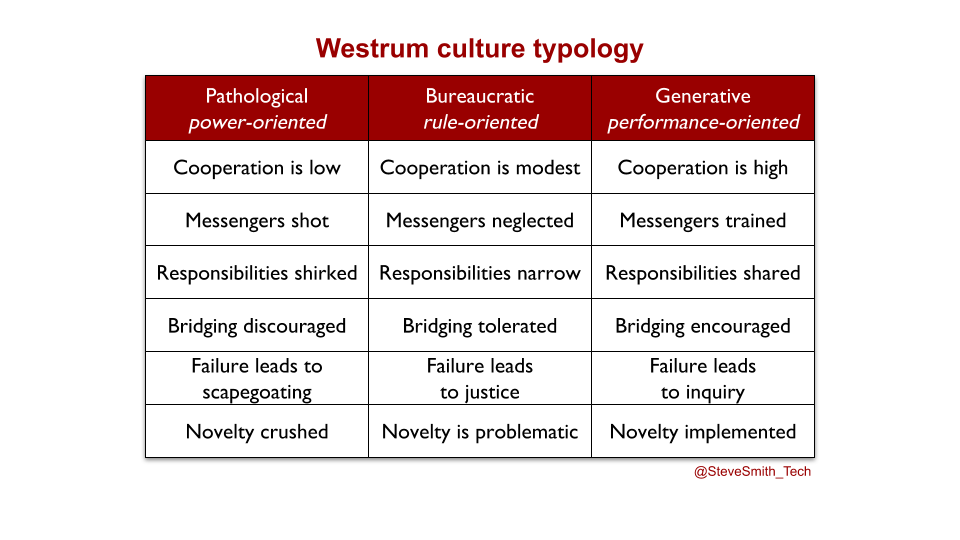

In A Typology of Organisational Cultures, Ron Westrum defines culture as power, rule, or performance-oriented. An organisation in a state of Discontinuous Delivery has a power or rule-oriented culture, in which bridging between teams is discouraged or barely tolerated.

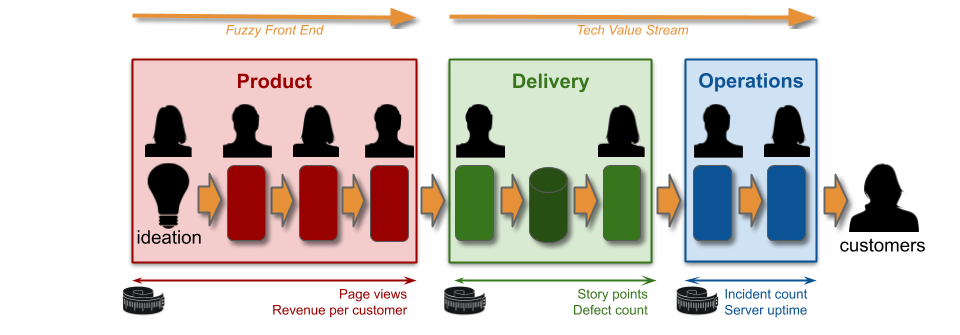

A majority of organisations mired in Discontinuous Delivery have IT as a Cost Centre. Their value streams crosscut siloed organisational functions in Product, Delivery, and Operations. Each silo has its own target measures, which reinforce a power or rule-oriented culture. Examples include page views and revenue per customer in Product, story points and defect counts in Delivery, and incident counts and server uptime in Operations.

These are vanity measures. A vanity measure in a value stream is an output of one or a few siloed activities, in a single organisational function. As a target, it is vulnerable to individual bias, under-reporting, and over-reporting by people within that silo, due to Goodhart’s Law. Vanity measures have an inherently low information value, and incentivise people in different silos to work at cross-purposes.

Measure outcomes, not outputs

Measuring Continuous Delivery by the author describes how an organisation can transition from Discontinuous Delivery to Continuous Delivery, and dramatically improve service throughput and reliability. A performance-oriented culture needs to be introduced, in which bridging between teams is encouraged.

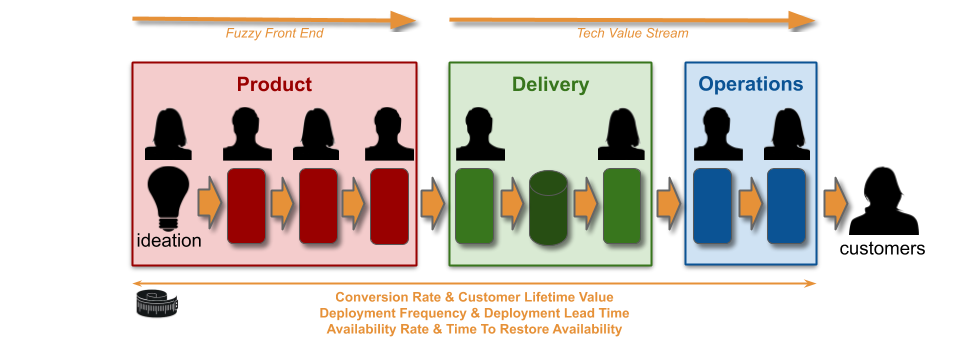

The first step in that process is to replace vanity measures with actionable measures. An actionable measure in a value stream is a holistic outcome for all activities. It has a high information value. It has some protection against individual bias, under-reporting, and over-reporting, because it is spread across all organisational functions.

Actionable measures for customer success could include conversion rate, and customer lifetime value. Accelerate by Dr Nicole Forsgren et al details the actionable measures for service throughput:

- Deployment frequency. The time between production deployments.

- Deployment lead time. The time between a mainline code commit and its deployment.
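As a rough sketch of how these two throughput measures could be calculated, the Python below assumes a hypothetical list of (mainline commit time, production deployment time) pairs and takes the mean of each interval. Neither the record format nor the choice of mean over median comes from Accelerate. Both are assumptions for illustration.

```python
from datetime import datetime, timedelta
from statistics import mean

# Hypothetical record: (mainline commit time, production deployment time).
Deployment = tuple[datetime, datetime]


def deployment_frequency(deployments: list[Deployment]) -> timedelta:
    """Mean time between consecutive production deployments."""
    times = sorted(deployed for _, deployed in deployments)
    if len(times) < 2:
        raise ValueError("need at least two deployments")
    gaps = [(later - earlier).total_seconds() for earlier, later in zip(times, times[1:])]
    return timedelta(seconds=mean(gaps))


def deployment_lead_time(deployments: list[Deployment]) -> timedelta:
    """Mean time from a mainline commit to its production deployment."""
    if not deployments:
        raise ValueError("need at least one deployment")
    return timedelta(seconds=mean((deployed - committed).total_seconds()
                                  for committed, deployed in deployments))


deployments = [
    (datetime(2024, 1, 1, 8), datetime(2024, 1, 1, 12)),
    (datetime(2024, 1, 3, 10), datetime(2024, 1, 3, 12)),
    (datetime(2024, 1, 5, 6), datetime(2024, 1, 5, 12)),
]
print(deployment_frequency(deployments))  # 2 days, 0:00:00
print(deployment_lead_time(deployments))  # 4:00:00
```

Here the service deploys every two days, with a four hour lead time from commit to production.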

Accelerate does not extend to service reliability. The actionable measures of service reliability are availability rate, and time to restore availability.
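A similarly minimal sketch of the two reliability measures follows. It assumes availability rate is counted as successful requests over total requests, and time to restore as the mean duration of hypothetical incident records (availability lost, availability restored). An uptime-based definition of availability would be equally valid.

```python
from datetime import datetime, timedelta
from statistics import mean


def availability_rate(successful: int, total: int) -> float:
    """Percentage of requests served successfully (assumed request-based definition)."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * successful / total


def time_to_restore(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean time from availability being lost to it being restored."""
    if not incidents:
        raise ValueError("need at least one incident")
    return timedelta(seconds=mean((restored - lost).total_seconds()
                                  for lost, restored in incidents))


print(availability_rate(999_000, 1_000_000))  # 99.9
incidents = [
    (datetime(2024, 1, 2, 9, 0), datetime(2024, 1, 2, 9, 30)),
    (datetime(2024, 1, 9, 14, 0), datetime(2024, 1, 9, 15, 30)),
]
print(time_to_restore(incidents))  # 1:00:00
```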

Target measures for service throughput and reliability need to be set for services at the start of a Continuous Delivery programme. They increase information flow, cooperation, and trust between people, teams, and organisational functions within the same value stream. They make it clear the product manager, delivery team, and operations team working on the same service share a responsibility for its success. It is less obvious who is accountable for choosing the target measures, and ensuring they are met.

Avoid delivery tech lead accountability

One way to approach target accountability is for a product manager to be accountable for a deployment frequency target, and a delivery tech lead accountable for a deployment lead time target. This is based on the premise that deployment lead time correlates with technical quality, and that reducing it depends on delivery team ownership of the release process.

It is true that Continuous Delivery is predicated on a delivery team gradually assuming sole ownership of the release process, and fully automating it as a deployment pipeline. However, the argument for delivery tech lead accountability is flawed, due to:

- Process co-ownership. The release process is co-owned by delivery and operations teams at the outset, while it is manual or semi-automated. A delivery tech lead cannot be accountable for a team in another organisational function.

- Limited influence. An operations team is unlikely to be persuaded by a delivery tech lead that release activities should move to a delivery team for automation. A delivery tech lead is not influential in other organisational functions.

- Prioritisation conflict. The product manager and delivery tech lead have separate priorities, for product features and deployment lead time experiments. A delivery tech lead cannot compete with a product manager prioritising product features on their own.

- Siloed accountabilities. A split in throughput target accountability perpetuates the Product and IT divide. A delivery tech lead cannot force a product manager to be invested in deployment lead time.

Maximise product manager accountability

In a power or rule-oriented culture, driving change across organisational functions requires significant influence. The most influential person across Product, Delivery, and Operations for a service will be its budget holder, and that is the product manager. They will be viewed as the sponsor for any improvement efforts related to the service. Their approval will lend credibility to changes, such as a delivery team owning and automating the release process.

Product managers should be accountable for all service throughput and reliability targets. It will encourage them to buy into Continuous Delivery as the means to achieve their product goals. It will incentivise them to prioritise deployment lead time experiments and operational features alongside product features. It will spur them to promote change across organisational functions.

In The Decision Maker, Dennis Bakke advocates effective decision making as a leader choosing a decision maker, and the decision maker gathering information before making a decision. A product manager does not have to choose the targets themselves. If they wish, they can nominate their delivery tech lead to gather feedback from different people, and then make a decision for which the product manager is accountable. The delivery and operations teams are then responsible for delivering the service, with sufficient technical discipline and engineering skills to achieve those targets.

A product manager may be uncomfortable with accountability for IT activities. The multi-year research data in Accelerate is clear about the business benefits of a faster deployment lead time:

- Less rework. The ability to quickly find defects in automated tests tightens up feedback cycles, which reduces rework and accelerates feature development.

- More revenue protection. Using the same deployment pipeline to restore lost availability, or apply a security patch in minutes, limits revenue losses and reputational damage on failure.

- Customer demand potential. A deployment lead time that is a unit of time less than deployment frequency, such as a lead time of hours against a deployment frequency of days, demonstrates an ability to satisfy more demand, if it can be unlocked.

Summary

Adopting Continuous Delivery is based on effective target measures of service throughput and reliability. Establishing the same targets across all the organisational functions in a value stream will start the process of nudging the organisational culture from power or rule-oriented to performance-oriented. The product manager should be accountable for all target measures, and the delivery and operations teams responsible for achieving them.

It will be hard. There will be authoritative voices in different organisational functions, with a predisposition to vanity measures. The Head of Product, Head of Delivery, and Head of Operations might have competing budget priorities, and might not agree on the same target measures. Despite these difficulties, it is vital the right target measures are put in place. As Peter Drucker said in The Essential Drucker, ‘if you want something new, you have to stop doing something old’.

Acknowledgements

Thanks to Charles Kubicek, Dave Farley, Phil Parker, and Thierry de Pauw for their feedback.

“In 2017, a Director of Ops asked me to turn their sysadmins into ‘SRE consultants’. I reminded them of their operability engineering team driving similar practices, and that I was their lead.

In 2018, a CTO at a gaming company told me SRE was better than DevOps, but recruitment was harder. They said they didn’t know much about SRE.

In 2020, I learned of a sysadmin team that were rebranded as an SRE team, received a small pay increase… and then carried on doing the same sysadmin work.

This is for decision makers who have been told SRE will solve their IT problems…”

Steve Smith

TL;DR:

- SRE as a Philosophy means the Site Reliability Engineering principles from Google, and is associated with a lot of valuable ideas and insights.

- SRE as a Cult refers to the marketing of SRE teams and SRE certifications as a panacea for technology problems.

- Some aspects of SRE as a Philosophy are far harder to apply to enterprise organisations than others, such as SRE teams and error budgets.

- Operability needs to be a key focus, not SRE as a Cult, and SRE as a Philosophy can supply solid ideas for improving operability.

Introduction

A successful Digital transformation is predicated on a transition from IT as a Cost Centre to IT as a Business Differentiator. An IT cost centre creates segregated Delivery and Operations teams, trapped in an endless conflict between feature speed and service reliability. Delivery wants to maximise deployments, to increase speed. Operations wants to minimise deployments, to increase reliability.

In Accelerate, Dr Nicole Forsgren et al confirm this produces low performance IT, and has negative consequences for profitability, market share, and productivity. Accelerate also demonstrates speed and reliability are not a zero sum game. Investing in both feature speed and service reliability will produce a high performance IT capability that can uncover new product revenue streams.

SRE as a Philosophy

In 2004, Ben Treynor Sloss started an initiative called SRE within Google. He later described SRE as a software engineering approach to IT operations, with developers automating work historically owned outside Google by sysadmins. SRE was disseminated in 2016 by the seminal book Site Reliability Engineering, by Betsy Beyer et al. Key concepts include:

- Availability levels.

- Service Level Objectives.

- Error budgets.

- You Build It SRE Run It.

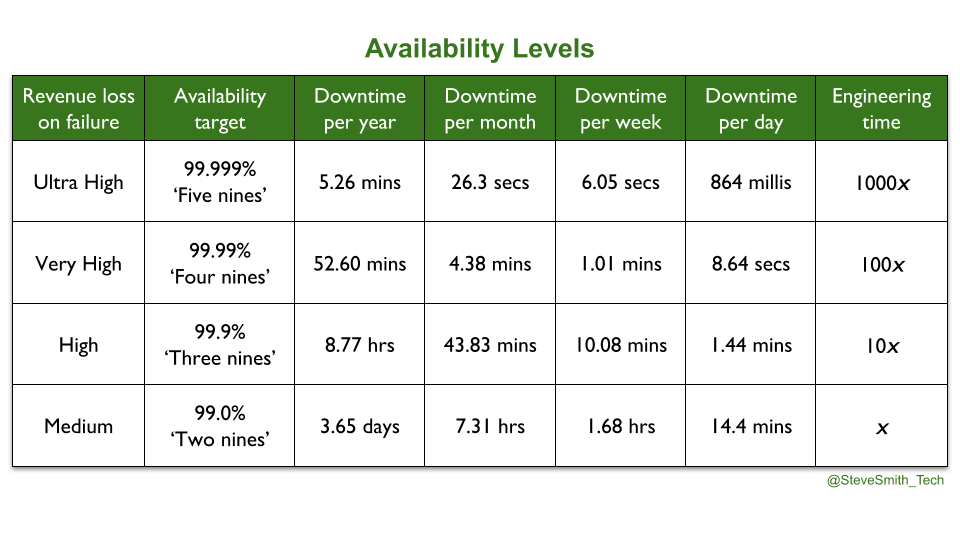

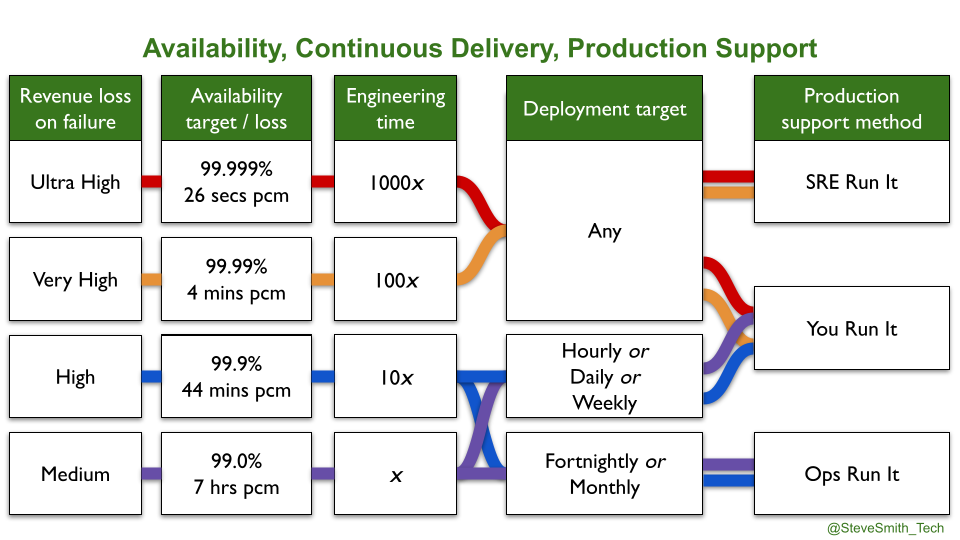

Availability levels are known by the nines of availability. 99.0% is two nines, 99.999% is five nines. 100% availability is unachievable, as less reliable user devices will limit the user experience. 100% is also undesirable, as maximising availability limits speed of feature delivery and increases operational costs. Site Reliability Engineering contains the astute observation that ‘an additional nine of reliability requires an order of magnitude improvement’. At any availability level, an amount of unplanned downtime needs to be tolerated, in order to invest in feature delivery.
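The arithmetic behind the nines can be sketched in a few lines of Python. This is an illustration only: the downtime figures assume a 365-day year of continuous operation, and the effort multiplier simply encodes the order of magnitude rule of thumb quoted above, not a measured cost model.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumes a 365-day year


def nines_to_availability(nines: int) -> float:
    """Convert a count of nines to an availability fraction, e.g. 3 -> 0.999."""
    return 1 - 10 ** -nines


def downtime_minutes_per_year(availability: float) -> float:
    """Unplanned downtime tolerated per year at a given availability fraction."""
    return (1 - availability) * MINUTES_PER_YEAR


def relative_effort(target_nines: int, baseline_nines: int) -> int:
    """Rule of thumb: each additional nine needs roughly 10x the engineering effort."""
    if target_nines < baseline_nines:
        raise ValueError("target must have at least as many nines as the baseline")
    return 10 ** (target_nines - baseline_nines)


for nines in range(2, 6):
    minutes = downtime_minutes_per_year(nines_to_availability(nines))
    print(f"{nines} nines: {minutes:,.1f} minutes of downtime per year")

print(relative_effort(4, 3), relative_effort(4, 2))  # 10 100
```

Three nines tolerates roughly 525 minutes, or under nine hours, of unplanned downtime a year, whereas five nines tolerates only around five minutes.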

A Service Level Objective (SLO) is a published target range of measurements, which sets user expectations on an aspect of service performance. A product manager chooses SLOs, based on their own risk tolerance. They have to balance the engineering cost of meeting an SLO with user needs, the revenue potential of the service, and competitor offerings. An availability SLO could be a median request success rate of 99.9% in 24 hours.
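One possible reading of that example SLO is sketched below. It assumes the 24 hours are split into hourly buckets of (successful, total) request counts, and that the median of the hourly success rates is compared to 99.9%. The bucket size and function names are hypothetical.

```python
from statistics import median

# Hypothetical hourly bucket: (successful requests, total requests).
Bucket = tuple[int, int]


def median_success_rate(buckets: list[Bucket]) -> float:
    """Median of per-bucket success rates, skipping buckets with no traffic."""
    rates = [successful / total for successful, total in buckets if total > 0]
    if not rates:
        raise ValueError("no traffic in the window")
    return median(rates)


def meets_slo(buckets: list[Bucket], target: float = 0.999) -> bool:
    """True if the median success rate over the window meets the target."""
    return median_success_rate(buckets) >= target


day_with_bad_hours = [(1000, 1000)] * 20 + [(900, 1000)] * 4
degraded_day = [(998, 1000)] * 24
print(meets_slo(day_with_bad_hours), meets_slo(degraded_day))  # True False
```

A median ignores a minority of bad hours entirely, so a service could suffer several hours of heavy failures and still meet this SLO. Dividing all successful requests by all requests in the window is a stricter alternative.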

An error budget is a quarterly amount of tolerable, unplanned downtime for a service. It is used to mitigate any inter-team conflicts between product teams and SRE teams, as found in You Build It Ops Run It. It is calculated as 100% minus the chosen nines of availability. For example, an availability level of 99.9% equates to an error budget of 0.1% unsuccessful requests. If 0.02% of the quarter's requests fail in a single week, that would consume 20% of the error budget, and leave 80% for the rest of the quarter.
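A minimal sketch of the error budget arithmetic, assuming the budget is expressed against a forecast of the quarter's total requests. The forecast and the function names are hypothetical; a real implementation would more likely track actual request counts as the quarter progresses.

```python
def error_budget(availability: float) -> float:
    """Fraction of requests allowed to fail: 100% minus the availability level."""
    if not 0.0 < availability < 1.0:
        raise ValueError("availability must be between 0 and 1")
    return 1.0 - availability


def budget_remaining(availability: float, forecast_requests: int, failed_requests: int) -> float:
    """Fraction of the quarterly error budget left; negative once the budget is blown.

    forecast_requests is a hypothetical forecast of the quarter's total requests.
    """
    if forecast_requests <= 0:
        raise ValueError("forecast_requests must be positive")
    allowed_failures = error_budget(availability) * forecast_requests
    return 1.0 - failed_requests / allowed_failures


remaining = budget_remaining(0.999, forecast_requests=10_000_000, failed_requests=2_000)
print(f"{remaining:.0%} of the error budget remains")  # 80% of the error budget remains
```

With a 99.9% availability level and a forecast of 10 million requests, the budget allows 10,000 failures, so 2,000 failures in one week leaves 80% of the budget for the rest of the quarter.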

You Build It SRE Run It is a conditional production support method, where a team of SREs support a service for a product team. All product teams do You Build It You Run It by default, and there are strict entry and exit criteria for an SRE team. A service must have a critical level of user traffic, some elevated SLOs, and pass a readiness review. The SREs will take over on-call, and ensure SLOs are consistently met. The product team can launch new features if the service is within its error budget. If not, they cannot deploy until any errors are resolved. If the error budget is repeatedly blown, the SRE team can hand on-call back to the product team, who revert to You Build It You Run It.

This is SRE as a Philosophy. The biggest gift from SRE is a framework for quantifying availability targets and engineering effort, based on product revenue. SRE has also promoted ideas such as measuring partial availability, monitoring the golden signals of a service, building SLO alerts and SLI dashboards from the same telemetry data, and reducing operational toil where possible.

SRE as a Cult

In the 2010s, the DevOps philosophy of collaboration was bastardised by the ubiquitous DevOps as a Cult. Its beliefs are:

- The divide between Delivery and Operations teams is always the constraint in IT performance.

- DevOps automation tools, DevOps engineers, DevOps teams, and/or DevOps certifications are always solutions to that problem.

In a similar vein, the SRE philosophy has been corrupted by SRE as a Cult. The SRE cargo cult is based on the same flawed premise, and espouses SRE error budgets, SRE engineers, SRE teams, and SRE certifications as a panacea. Examples include Patrick Hill stating in Love DevOps? Wait until you meet SRE that ‘SRE removes the conjecture and debate over what can be launched and when’, and the DevOps Institute offering SRE certification.

SRE as a Cult ignores the central question facing the SRE philosophy – its applicability to IT as a Cost Centre. SRE originated from talented, opinionated software engineers inside Google, where IT as a Business Differentiator is a core tenet. Using A Typology of Organisational Cultures by Ron Westrum, the Google culture can be described as generative. Accelerate confirms this is predictive of high performance IT, and less employee burnout.

There are fundamental challenges with applying SRE to an IT as a Cost Centre organisation with a bureaucratic or pathological culture. Product, Delivery, and Operations teams will be hindered by orthogonal incentives, funding pressures, and silo rivalries.

For availability levels, if failure leads to scapegoating or justice:

- Heads of Product/Delivery/Operations might not agree 100% reliability is unachievable.

- Heads of Product/Delivery/Operations might not accept an additional nine of reliability means an order of magnitude more engineering effort.

- Heads of Delivery/Operations might not consent to availability levels being owned by product managers.

For Service Level Objectives, if responsibilities are shirked or discouraged:

- Product managers might decline to take on responsibility for service availability.

- Product managers will need help from Delivery teams to uncover user expectations, calculate service revenue potential, and check competitor availability levels.

- Sysadmins might object to developers wiring automated, fine-grained measurements into their own production alerts.

For error budgets, if cooperation is modest or low:

- Product managers/developers/sysadmins might disagree on availability levels and the maths behind error budgets.

- Heads of Product/Development might not accept a block on deployments when an error budget is 0%.

- A Head of Operations might not accept deployments at all hours when an error budget is above 0%.

- Product managers/developers might accuse sysadmins of blocking deployments unnecessarily.

- Sysadmins might accuse product managers/developers of jeopardising reliability.

- A Head of Operations might arbitrarily block production deployments.

- A Head of Development might escalate a block on production deployments.

- A Head of Product might override a block on production deployments.

For You Build It SRE Run It, if bridging is merely tolerated or discouraged:

- A Head of Operations might not consent to on-call Delivery teams on their opex budget.

- A Head of Development might not consent to on-call Delivery teams on their capex budget.

- A Head of Operations might be unable to afford months of software engineering training for their sysadmins on an opex budget.

- Sysadmins might not want to undergo training, or be rebadged as SREs.

- Developers might not want to do on-call for their services, or be rebadged as SREs.

- Delivery teams will find it hard to collaborate with an Operations SRE team on errors and incident management.

- A Head of Operations might be unable to transfer an unreliable service back to the original Delivery team, if it was disbanded when its capex funding ended.

In Site Reliability Engineering, Ben Treynor Sloss identifies SRE recruitment as a significant challenge for Google. SRE needs developers who excel at both software engineering and systems administration, and they are rare. He counters this by arguing an SRE team is cheaper than an Operations team, as headcount is reduced by task automation. Recruitment challenges will be exacerbated by smaller budgets in IT as a Cost Centre organisations. The touted headcount benefit is absurd, as salary rates are invariably higher for developers than sysadmins.

Aim for Operability, not SRE as a Cult

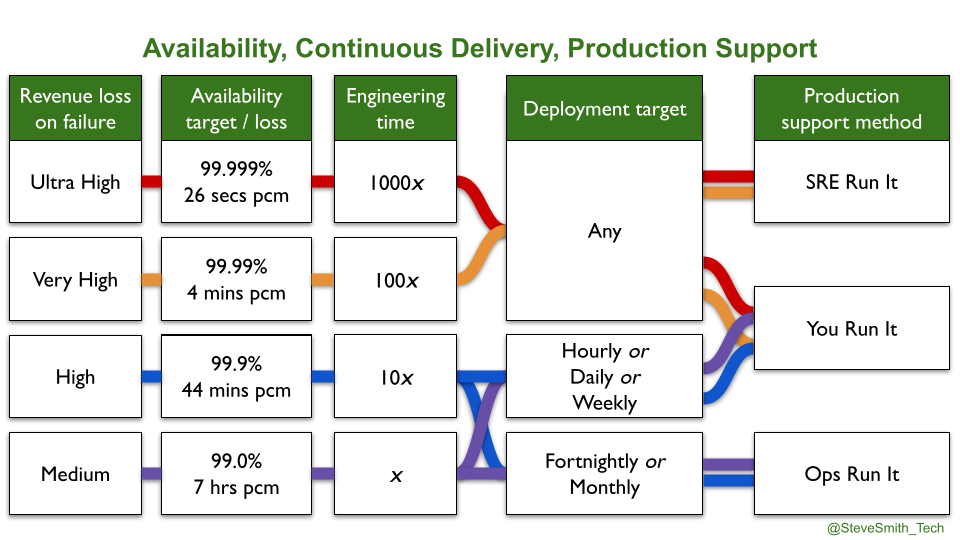

High performance IT requires Continuous Delivery and Operability. Operability refers to the ease of safely and reliably operating production systems. Increasing service operability will improve reliability, reduce operational rework, and increase feature speed. Operability practices include prioritising operational requirements, automated infrastructure, deployment health checks, pervasive telemetry, failure injection, incident swarming, learning from incidents, and You Build It You Run It.

These practices can be implemented with or without SRE. In addition, some SRE concepts such as availability levels and Service Level Objectives can be implemented independently of SRE. In particular, product managers being responsible for calculating availability levels based on their risk tolerances is often a major step forward from the status quo.

SRE as a Cult obscures important questions about SRE applicability to SMEs and enterprise organisations. You Build It SRE Run It is a difficult fit for an IT as a Cost Centre organisation, and is not cost effective at all availability levels. The amount of investment required in employee training, organisational change, and task automation to run an SRE team alongside You Build It You Run It teams is an order of magnitude more than You Build It You Run It itself. It is only warranted when multiple services exist with critical user traffic, and at an availability level of four nines or more.

An IT as a Cost Centre organisation would do well to implement You Build It You Run It instead. It unlocks daily deployments, by eliminating handoffs between Delivery and Operations teams. It minimises incident resolution times, via single-level swarming support prioritised ahead of feature development. Furthermore, it maximises incentives for developers to focus on operational features, as they are on-call out of hours themselves. It is a cost effective method of revenue protection, from two nines to five nines of availability.

In some cases, an SME or enterprise organisation will earn tens of millions in product revenues each day, its reliability needs will be extreme, and investing in SRE as a Philosophy could be warranted. Otherwise, heed the perils of SRE as a Cult. As Luke Stone said in Seeking SRE, ‘in the long run, SRE is not going to thrive in your organisation based purely on its current popularity’.

Acknowledgements

Thanks to Adam Hansrod, Dave Farley, Denise Yu, John Allspaw, Spike Lindsey, and Thierry de Pauw for their feedback.

How does Site Reliability Engineering (SRE) approach production support? Why is it conditional, and how do error budgets try to avoid the inter-team conflicts of You Build It Ops Run It?

This is part of the Who Runs It series.

Introduction

The usual alternative to the You Build It Ops Run It production support method is You Build It You Run It. This means a development team is responsible for supporting its own services in production. It eliminates handoffs between developers and sysadmins, and maximises operability incentives for developers. It has the ability to unlock daily deployments, and improve production reliability.

A less common alternative to You Build It Ops Run It is a Site Reliability Engineering (SRE) on-call team. This can be referred to as You Build It SRE Run It. It is a conditional production support method, with an operations-focussed development team supporting critical services owned by other development teams.

SRE is a software engineering approach to IT operations. It started at Google in 2004, and was popularised by Betsy Beyer et al in the 2016 book Site Reliability Engineering. In The SRE model, Jaana Dogan states ‘what makes Google SRE significantly different is not just their world-class expertise, but the fact that they are optional’. An SRE on-call team has strict entry and exit criteria for services. The process is:

- A development team does You Build It You Run It by default. Their service has a quarterly error budget.

- If user traffic becomes substantial, the development team requests SRE on-call assistance. Their service must pass a readiness review.

- If the review is successful, the development team shares the on-call rota with some SREs.

- If user traffic becomes critical, the development team hands over the on-call rota to a team of SREs.

- The SRE team automates operational tasks to improve service availability, latency, and performance. They monitor the service, and respond to any incidents.

- If the service is inside its error budget, the development team can launch new features without involving the SRE team.

- If the service is outside its error budget, the development team cannot launch new features until the SRE team is satisfied all errors are resolved.

- If the service is consistently outside its error budget, the SRE team hands the on-call rota back to the development team. The service reverts to You Build It You Run It.
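The entry and exit criteria above behave like a small state machine. The sketch below models them under some loud assumptions: traffic is classified into three hypothetical levels, a readiness review is a simple pass or fail, a service moves one step per evaluation, and a consistently blown error budget hands the rota back from either SRE-supported state.

```python
from enum import Enum, IntEnum, auto


class Traffic(IntEnum):
    """Hypothetical traffic levels; the thresholds are left to the organisation."""
    LOW = 1
    SUBSTANTIAL = 2
    CRITICAL = 3


class Support(Enum):
    YOU_BUILD_IT_YOU_RUN_IT = auto()  # default: development team is on-call
    SHARED_ROTA = auto()              # development team shares the rota with some SREs
    YOU_BUILD_IT_SRE_RUN_IT = auto()  # SRE team owns the on-call rota


def next_support(current: Support, traffic: Traffic,
                 passed_readiness_review: bool,
                 consistently_outside_budget: bool) -> Support:
    # Exit criterion (assumed to apply to both SRE-supported states).
    if current is not Support.YOU_BUILD_IT_YOU_RUN_IT and consistently_outside_budget:
        return Support.YOU_BUILD_IT_YOU_RUN_IT
    # Entry criterion: substantial traffic plus a successful readiness review.
    if (current is Support.YOU_BUILD_IT_YOU_RUN_IT
            and traffic >= Traffic.SUBSTANTIAL and passed_readiness_review):
        return Support.SHARED_ROTA
    # Critical traffic: the SRE team takes over the whole rota.
    if current is Support.SHARED_ROTA and traffic == Traffic.CRITICAL:
        return Support.YOU_BUILD_IT_SRE_RUN_IT
    return current


def can_launch(within_error_budget: bool, sre_satisfied_errors_resolved: bool) -> bool:
    """Inside the budget, launch freely; outside it, wait until the SREs are satisfied."""
    return within_error_budget or sre_satisfied_errors_resolved


mode = Support.YOU_BUILD_IT_YOU_RUN_IT
mode = next_support(mode, Traffic.SUBSTANTIAL, True, False)  # shared rota
mode = next_support(mode, Traffic.CRITICAL, True, False)     # SRE team on-call
mode = next_support(mode, Traffic.CRITICAL, True, True)      # rota handed back
print(mode.name)  # YOU_BUILD_IT_YOU_RUN_IT
```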

In a startup with IT as a Business Differentiator, an SRE on-call team is a product team like any other development team. Those development teams might support their own services, or rely on the SRE on-call team.

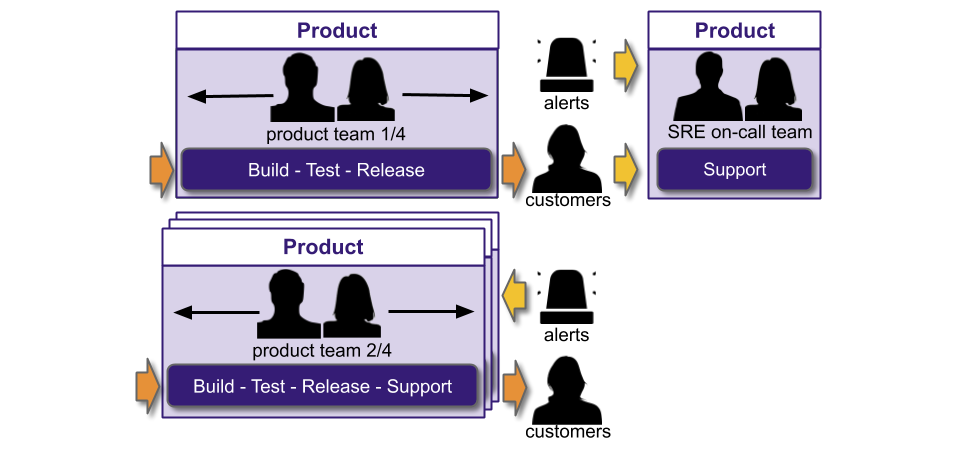

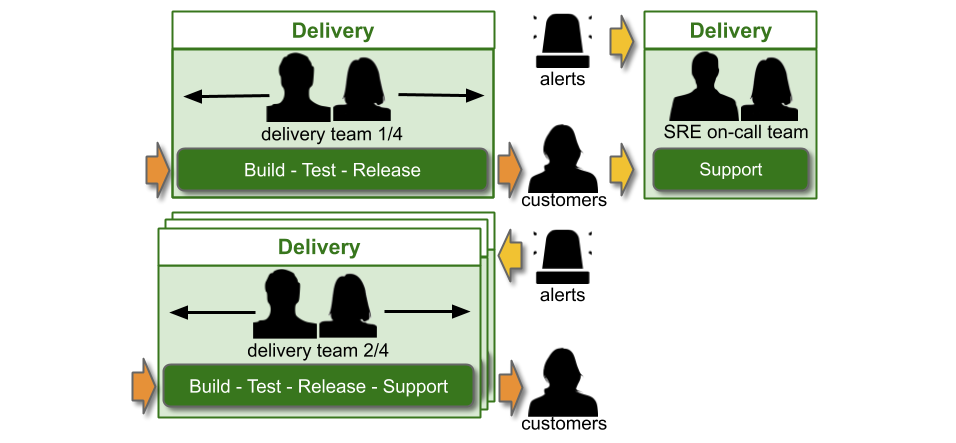

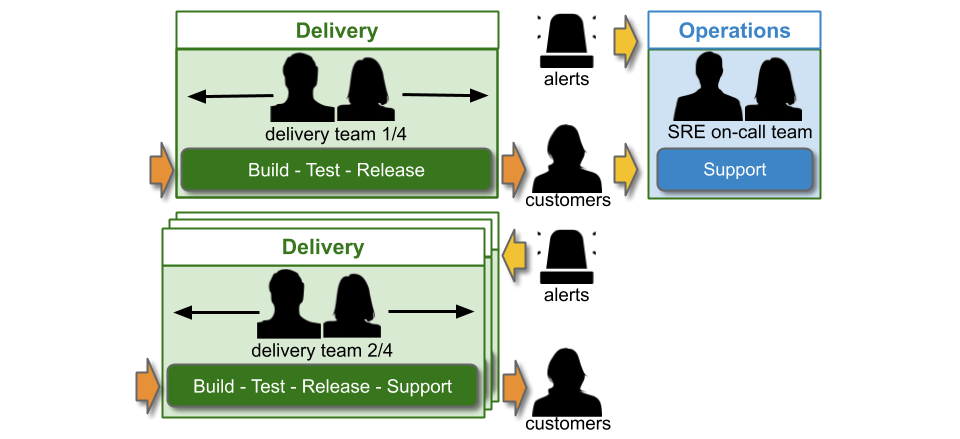

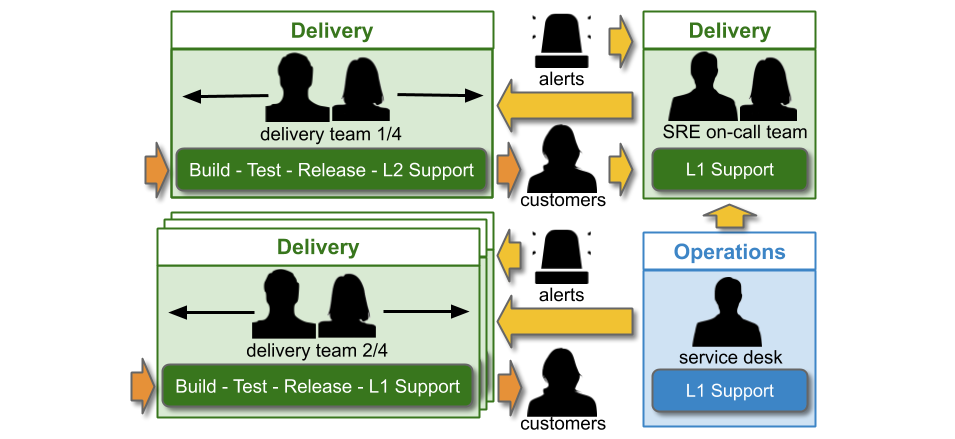

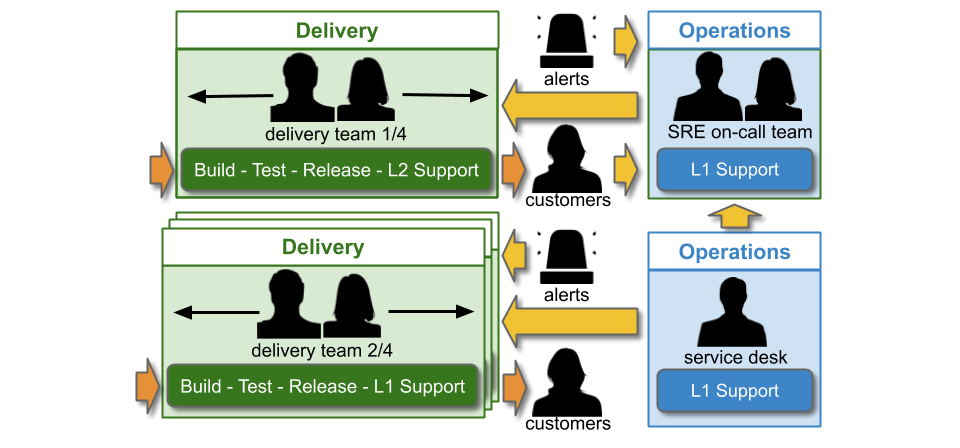

In an SME or enterprise organisation with IT as a Cost Centre, You Build It SRE Run It is very different. There are segregated Delivery and Operations functions, due to COBIT and Plan-Build-Run. The SRE on-call team could be within the Delivery function, and report into the Head of Delivery.

Alternatively, the SRE on-call team could be within the Operations function, and report into the Head of Operations.

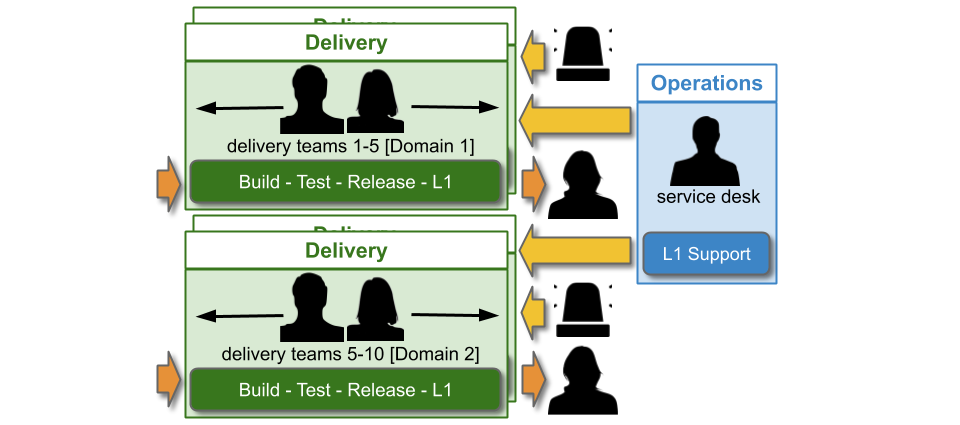

In IT as a Cost Centre, You Build It SRE Run It consists of single-level and multi-level support. An SRE on-call team participates in multiple support levels, with the Delivery teams that rely on them. A Delivery team supporting its own service has single-level swarming.

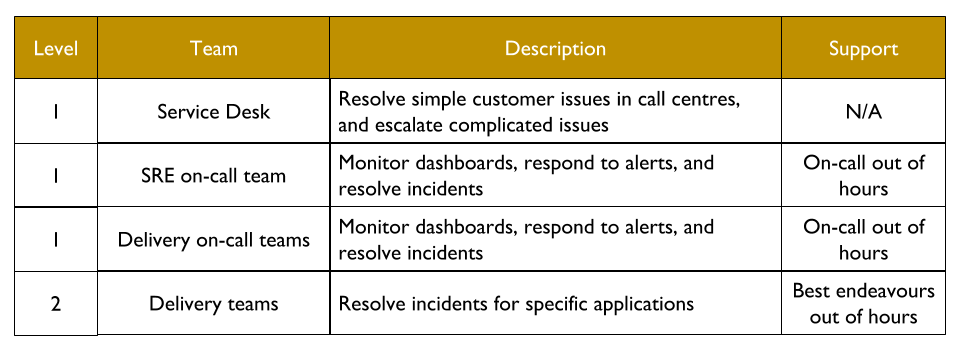

The Service Desk handles incoming customer requests. They can link a ticket in the incident management system to a specific web page or user journey, which reassigns the ticket to the correct on-call team. Delivery teams doing You Build It You Run It are L1 on-call for their own services. The SRE on-call team is L1 on-call for critical services, and when necessary they can escalate issues to the L2 Delivery teams building those services.
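A hypothetical sketch of that routing logic follows. The journey catalogue, team names, and critical service set are invented for illustration, and a real incident management system would most likely hold this as configuration rather than code.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical catalogue: user journey -> (service, owning Delivery team).
JOURNEYS = {
    "/checkout": ("payments", "team-payments"),
    "/search": ("search", "team-search"),
}
CRITICAL_SERVICES = {"payments"}  # services supported by the SRE on-call team
SRE_ON_CALL_TEAM = "sre-on-call"


@dataclass(frozen=True)
class Route:
    l1: str            # team that receives the ticket
    l2: Optional[str]  # escalation team, or None for single-level swarming


def route_ticket(journey: str) -> Route:
    """Reassign a Service Desk ticket to the correct on-call team for a user journey."""
    if journey not in JOURNEYS:
        raise LookupError(f"no on-call team registered for {journey}")
    service, delivery_team = JOURNEYS[journey]
    if service in CRITICAL_SERVICES:
        # The SRE on-call team is L1, and can escalate to the Delivery team at L2.
        return Route(l1=SRE_ON_CALL_TEAM, l2=delivery_team)
    # You Build It You Run It: the Delivery team is L1 and swarms on its own.
    return Route(l1=delivery_team, l2=None)


print(route_ticket("/checkout"))  # Route(l1='sre-on-call', l2='team-payments')
print(route_ticket("/search"))    # Route(l1='team-search', l2=None)
```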

If the SRE on-call team is in Delivery, they will be funded by a capex Delivery budget. The Service Desk will be funded out of an Operations opex budget.

If the SRE on-call team is in Operations, they will be funded by an Operations opex budget like the Service Desk team.

Continuous Delivery and Operability

In You Build It SRE Run It, delivery teams on-call for their own production services experience the usual benefits of You Build It You Run It. Using an SRE on-call team and error budgets is a different way to prioritise service availability and incident resolution. Delivery teams reliant on an SRE on-call team are encouraged to limit their failure blast radius, to protect their error budget. The option for an SRE on-call team to hand back an on-call rota to a delivery team is a powerful reminder that operability needs a continual investment.

You Build It SRE Run It has these advantages for product development:

- Short deployment lead times. Lead times are minimised as there are no handoffs to the SRE on-call team.

- Focus on outcomes. Delivery teams are empowered to test product hypotheses and deliver outcomes.

- Short incident resolution times. Incident response from the SRE on-call team is rapid and effective.

- Adaptive architecture. Services will be architected for failure, including Circuit Breakers and Canary Deployments.

- Product telemetry. Delivery teams continually update dashboards and alerts for the SRE on-call team, according to the product context.

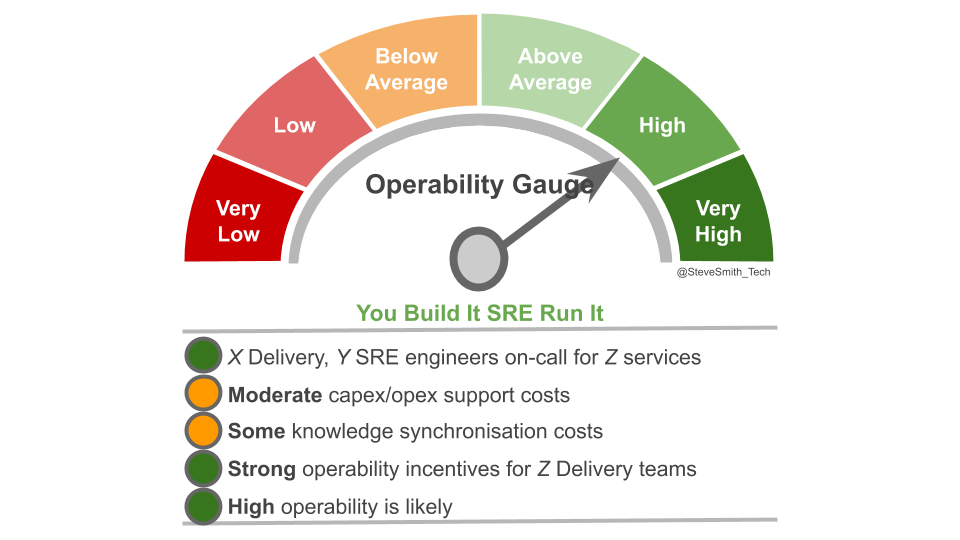

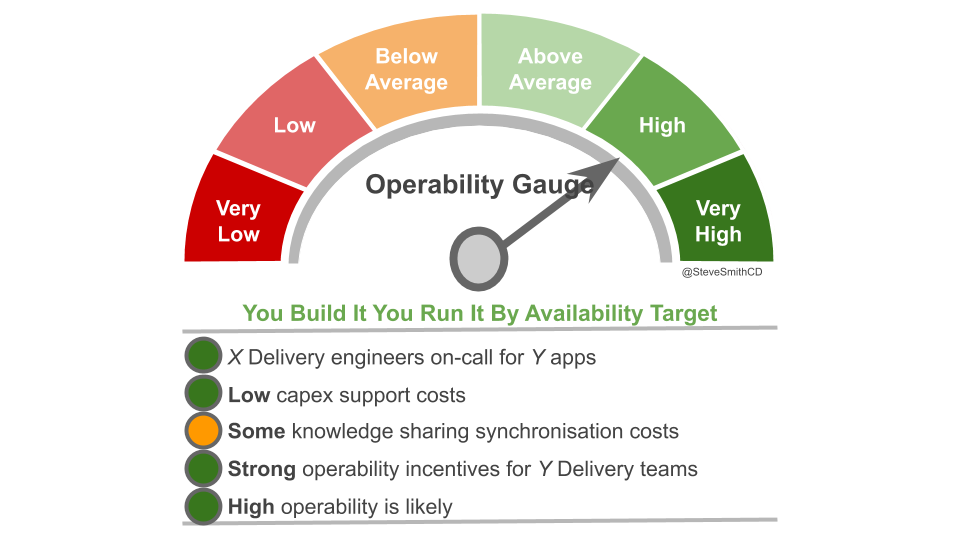

You Build It SRE Run It creates strong incentives for operability. Delivery teams on-call for their own services will have the maximum incentives to balance operational features with product features. There is 1 on-call engineer per team, at a low capex cost with no knowledge synchronisation costs between teams.

Delivery teams collaborating with an SRE on-call team do not have maximum operability incentives, as another team supports critical services with high levels of user traffic on their behalf. Theoretically, strong incentives remain due to error budgets. The ability of a delivery team to maintain a high deployment throughput without intervention depends on protecting service availability. This should ensure product managers prioritise operational features alongside product features. There is 1 on-call SRE for critical services at a capex or opex cost, and knowledge synchronisation costs between teams are inevitable.

Overinvesting in inapplicability

Production support is revenue insurance. At first glance, it might make sense to pay a premium for a high-powered SRE team to support highly available services with critical levels of user traffic. However, investing in an SRE on-call team should be questioned when its applicability to IT as a Cost Centre is so challenging.

Funding an SRE on-call team will be constrained by cost accounting. An SRE team in Delivery will have a capex budget, and undergo periodic funding renewals. An SRE team in Operations will have an opex budget, and endure regular pressure to find cost efficiencies. Either approach is at odds with a long term commitment to a large team of highly paid software engineers.

Error budgets are unlikely to magically solve the politics and bureaucracy that exist between Delivery teams and an SRE on-call team. Product managers, developers, and/or sysadmins might not agree on a service availability level, availability losses in recent incidents, and/or the remaining latitude in an error budget. A Head of Product might not accept an SRE block on deployments, when an error budget is lost. A Head of Delivery or Operations might not accept deployments at all hours, even with an error budget in place. In addition, an SRE on-call team might be unable to hand an on-call rotation back to a Delivery team, if it was disbanded when its capex funding ended.

In Site Reliability Engineering, Betsy Beyer et al describe near-universally applicable SRE practices, such as revenue-based availability targets and service level objectives. The authors also make the astute observation ‘an additional nine of reliability requires an order of magnitude improvement’. A 99.99% service requires 10x more engineering effort than 99.9%, and 100x more than 99.0%. You Build It SRE Run It is not easily applied to IT as a Cost Centre, and it requires a sizable investment in culture, people, process, and tools. It is best suited to organisations with a website that genuinely requires 99.99% availability, and where the maximum revenue loss in a large-scale failure could jeopardise the organisation itself. In a majority of scenarios, You Build It You Run It will be a simpler and more cost effective alternative.

Acknowledgements

Thanks to Thierry de Pauw.

The Who Runs It series:

- You Build It Ops Run It

- You Build It You Run It

- You Build It Ops Run It at scale

- You Build It You Run It at scale

- You Build It Ops Sometimes Run It

- Implementing You Build It You Run It at scale

- You Build It SRE Run It


Why is a hybrid of You Build It Ops Run It and You Build It You Run It doomed to fail at scale?

This is part of the Who Runs It series.

Introduction

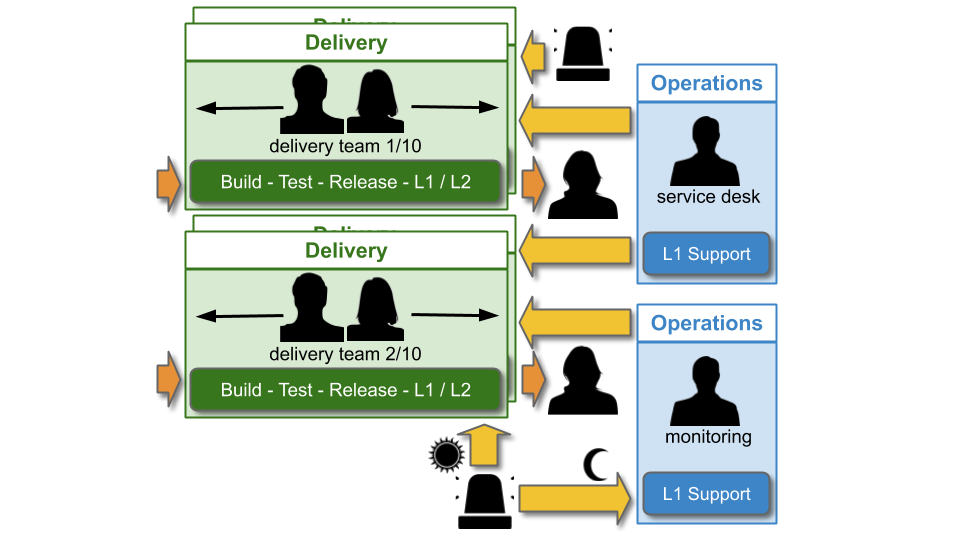

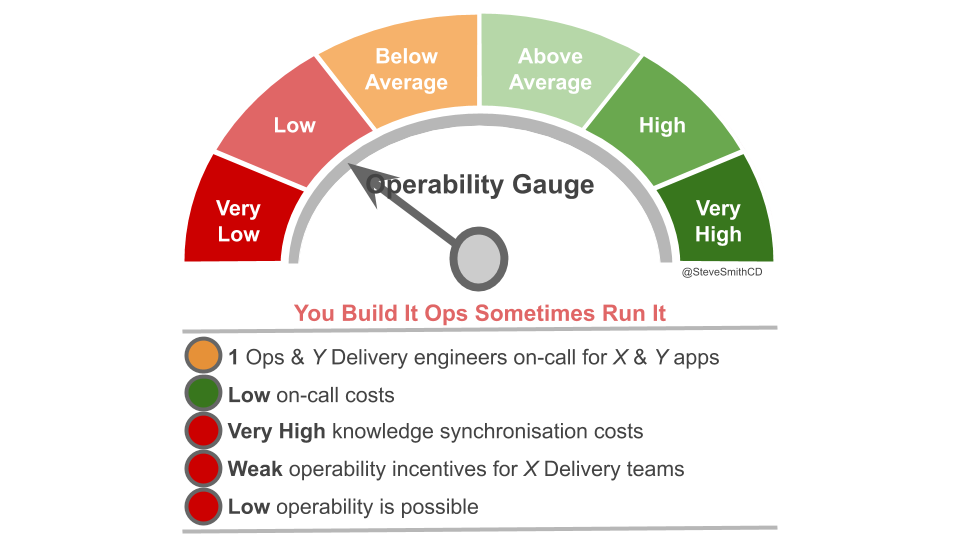

You Build It Ops Sometimes Run It refers to a mix of You Build It You Run It and You Build It Ops Run It. A minority of applications are supported by Delivery teams, whereas the majority are supported by a Monitoring team in Operations. This can be accomplished by splitting applications into higher and lower availability targets.

Some vendors may erroneously refer to this as Site Reliability Engineering (SRE). SRE refers to a central, on-call Delivery team supporting high availability, stable applications that meet stringent entry criteria. You Build It Ops Sometimes Run It is completely different to SRE, as the Monitoring team is disempowered and supports lower availability applications. The role and responsibilities of the Monitoring team are simply a hybrid of the Operations Bridge and Application Operations teams in the ITIL v3 Service Operation standard.

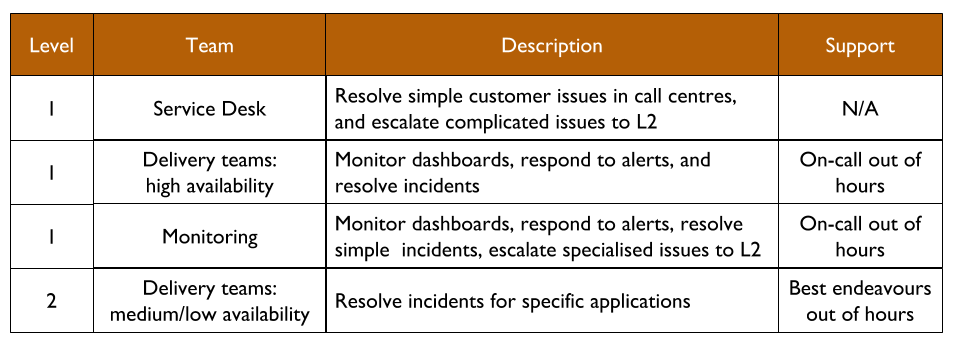

Out of hours, higher availability applications are supported by their L1 Delivery teams. Lower availability applications are supported by the L1 Monitoring team, who will receive alerts and respond to incidents. When necessary, the Monitoring team will escalate to L2 Delivery team members on best endeavours.

Support costs for Monitoring will be paid out of OpEx. Support costs for L1 Delivery teams should be paid out of CapEx, to ensure product managers balance desired availability with on-call costs. An L1 Delivery team member will be paid a flat standby rate, and a per-incident callout rate. An L2 Delivery team member will do best endeavours unpaid, and might be compensated per-callout with time off in lieu.
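To illustrate the cost a product manager would be weighing, here is a minimal sketch of the L1 on-call pay for one Delivery team member, using the flat standby rate and per-incident callout rate described above. The rates and periods are hypothetical, and L2 best endeavours is left out because it is unpaid.

```python
def l1_on_call_cost(standby_rate: float, standby_periods: int,
                    callout_rate: float, callouts: int) -> float:
    """Pay for one L1 Delivery team member: a flat standby rate plus a per-incident callout rate."""
    if min(standby_rate, standby_periods, callout_rate, callouts) < 0:
        raise ValueError("rates and counts must be non-negative")
    return standby_rate * standby_periods + callout_rate * callouts


# Hypothetical week: 7 nights of standby at 100 per night, 3 callouts at 50 each.
print(l1_on_call_cost(100.0, 7, 50.0, 3))  # 850.0
```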

Inherited Discontinuous Delivery and inoperability

Proponents of You Build It Ops Sometimes Run It will argue it is a low cost, wafer thin support team that simply follows runbooks to resolve straightforward incidents, and Delivery teams can be called out when necessary. However, many of the disadvantages of You Build It Ops Run It are inherited:

- Long time to restore – support ticket handoffs between Monitoring and Delivery teams will delay availability restoration during complex incidents

- Very high knowledge synchronisation costs – application and incident knowledge will not be shared between multiple Delivery teams and the Monitoring team without significant coordination costs, such as handover meetings

- Slow operational improvements – problems and workarounds identified by Monitoring will languish in Delivery backlogs for weeks or months, building up application complexity for future incidents

- No focus on outcomes – applications will be built as outputs only, with little regard for product hypotheses

- Fragile architecture – failure scenarios will not be designed into applications, increasing failure blast radius

- Inadequate telemetry – dashboards and alerts by the Monitoring team will only be able to include low-level operational metrics

- Traffic ignorance – challenges in live traffic management will be localised, and unable to inform application design decisions

- Restricted collaboration – joint incident response between Monitoring and Delivery teams will be hampered by different ways of working and tools

- Unfair on-call expectations – Delivery team members will be expected to be available out of hours without compensation for the inconvenience, and disruption to their lives

The Delivery teams on-call for higher availability applications will have strong operability incentives. However, with a Monitoring team responsible for a majority of applications, most Delivery teams will be unaware of or uninvolved in incidents. Those Delivery teams will have little reason to prioritise operational features, and the Monitoring team will be powerless to do so. Widespread inoperability and increased vulnerability to production incidents are unavoidable.

The notion of a wafer thin Monitoring team is fundamentally naive. If an IT department has an entrenched culture of You Build It Ops Run It at scale, there will be a predisposition towards Operations support. Delivery teams on-call for higher availability applications will be viewed as a mere exception to the rule. Over time, the Monitoring team will drift towards taking over office hours support, and then out of hours support for higher availability applications. At that point, the Monitoring team is just another Application Operations team, and all the disadvantages of You Build It Ops Run It at scale are assured.

The Who Runs It series:

- You Build It Ops Run It

- You Build It You Run It

- You Build It Ops Run It at scale

- You Build It You Run It at scale

- You Build It Ops Sometimes Run It

- Implementing You Build It You Run It at scale

- You Build It SRE Run It

Acknowledgements

Thanks to Thierry de Pauw.

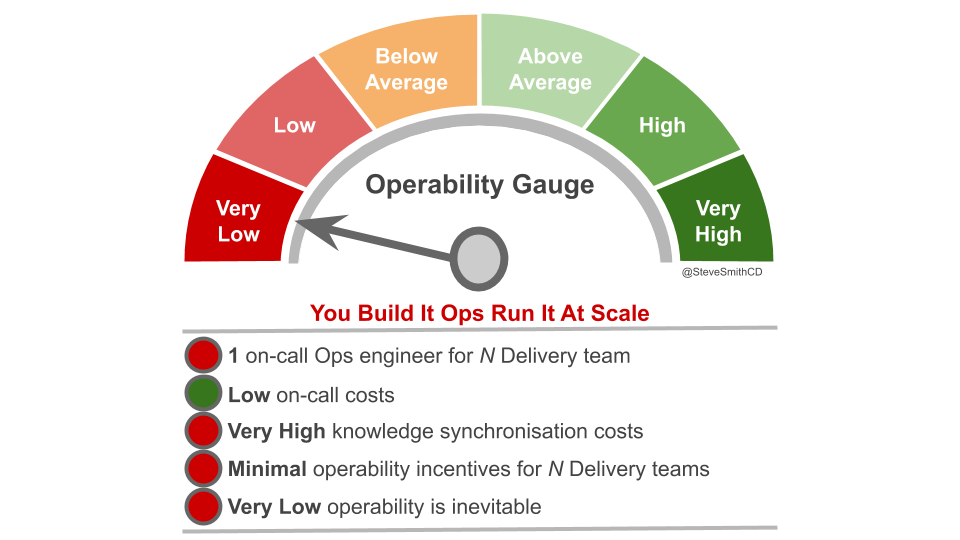

Why does Operations production support become less effective as Delivery teams and applications increase in scale?

This is part of the Who Runs It series.

Introduction

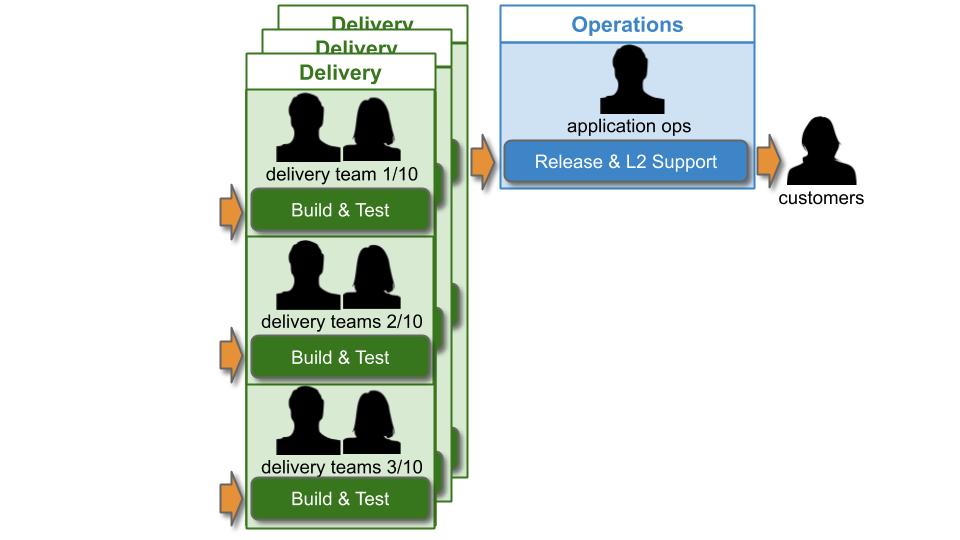

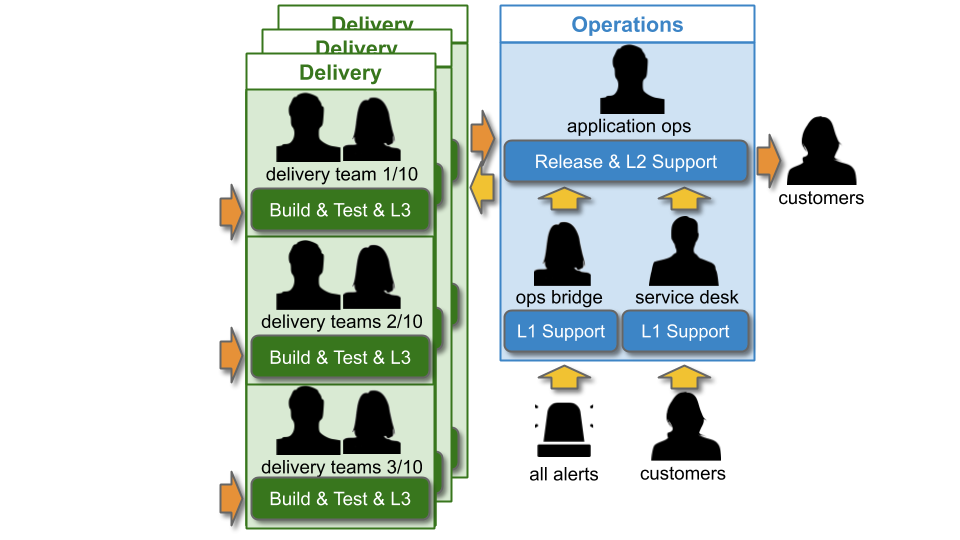

An IT As A Cost Centre organisation beholden to Plan-Build-Run will have a Delivery group responsible for building applications, and an Operations group responsible for deploying applications and production support. When there are 10+ Delivery teams and applications, this can be referred to as You Build It Ops Run It at scale. For example, imagine a single technology value stream used by 10 Delivery teams, in which each team builds a separate customer-facing application.

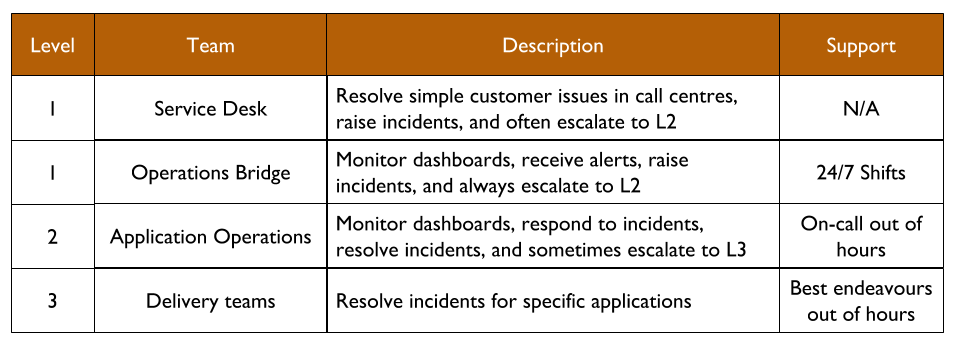

As with You Build It Ops Run It, there will be multi-level production support in accordance with the ITIL v3 Service Operation standard:

L1 and L2 Operations teams will be paid standby and callout costs out of Operational Expenditure (OpEx), and L3 Delivery team members on best endeavours are not paid. The key difference at scale is Operations workload. In particular, Application Operations will have to manage deployments and L2 incident response for 10+ applications. It will be extremely difficult for Application Operations to keep track of when a deployment is required, which alert corresponds to which application, and which Delivery team can help with a particular application.

Discontinuous Delivery and inoperability

At scale, the problems of You Build It Ops Run It are magnified, with a negative impact on both Continuous Delivery and operability:

- Long time to restore – support ticket handoffs between Ops Bridge, Application Operations, and multiple Delivery teams will delay availability restoration on failure

- Very high knowledge synchronisation costs – Application Operations will find it difficult to ingest knowledge of multiple applications and share incident knowledge with multiple Delivery teams

- No focus on customer outcomes – applications will be built as outputs only, with little time for product hypotheses

- Fragile architecture – failure scenarios will not be designed into user journeys and applications, increasing failure blast radius

- Inadequate telemetry – dashboards and alerts from Application Operations will only be able to show low-level operational metrics

- Traffic ignorance – applications will be built with little knowledge of how traffic flows through different dependencies

- Restricted collaboration – incident response between Application Operations and multiple Delivery teams will be hampered by different ways of working

- Unfair on-call expectations – Delivery team members will be expected to do unpaid on-call out of hours

These problems will make it less likely that application availability targets can consistently be met, and will increase Time To Restore (TTR) on availability loss. Production incidents will be more frequent, and revenue impact will potentially be much greater. This is a direct result of the lack of operability incentives. Application Operations cannot build operability into 10+ applications they do not own. Delivery teams will have little reason to do so when they have little to no responsibility for incident response.

A Theory Of Constraints lens on Continuous Delivery shows that reducing rework and queue times is key to deployment throughput. With 10+ Delivery teams and applications, the Application Operations workload will become intolerable, and team member burnout will be a real possibility. Queue time for deployments will mount up, and the countermeasure for release candidates blocked on Application Operations will be time-consuming management escalations. If product demand calls for more than weekly deployments, the rework and delays incurred in Application Operations will result in long-term Discontinuous Delivery.
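To illustrate why queue time mounts up as load approaches capacity, here is a sketch using a standard M/M/1 queueing model. That model is a swapped-in assumption, not part of the argument above: it treats Application Operations as a single server with random (Poisson) deployment requests and exponential service times. All rates are hypothetical.

```python
def mm1_mean_queue_wait(arrival_rate: float, service_rate: float) -> float:
    """Mean time a request spends waiting in an M/M/1 queue before service.

    Wq = rho / (mu - lambda), where rho = lambda / mu is utilisation.
    Both rates share a time unit (e.g. per day); the result is in that unit.
    """
    if arrival_rate < 0 or service_rate <= 0:
        raise ValueError("arrival rate must be non-negative and service rate positive")
    if arrival_rate >= service_rate:
        raise ValueError("queue is unstable: arrivals meet or exceed capacity")
    utilisation = arrival_rate / service_rate
    return utilisation / (service_rate - arrival_rate)


# Hypothetical: Application Operations can process 10 deployments per day,
# and each Delivery team requests 1 deployment per day on average.
for teams in (5, 8, 9):
    wait_days = mm1_mean_queue_wait(arrival_rate=teams, service_rate=10)
    print(f"{teams} teams: {wait_days:.2f} days waiting per deployment")
```

With these made-up numbers the mean wait rises from 0.1 to 0.4 to 0.9 days as utilisation goes from 50% to 90%, and at 10 teams the model has no stable queue at all. Real teams will not behave exactly like M/M/1, but the sharp non-linear growth near full utilisation is the illustration intended here.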

The Who Runs It series:

- You Build It Ops Run It

- You Build It You Run It

- You Build It Ops Run It at scale

- You Build It You Run It at scale

- You Build It Ops Sometimes Run It

- Implementing You Build It You Run It at scale

- You Build It SRE Run It

Acknowledgements

Thanks to Thierry de Pauw.

Why do disparate Delivery and Operations teams result in long-term Discontinuous Delivery of inoperable applications?

This is part of the Who Runs It series.

Introduction

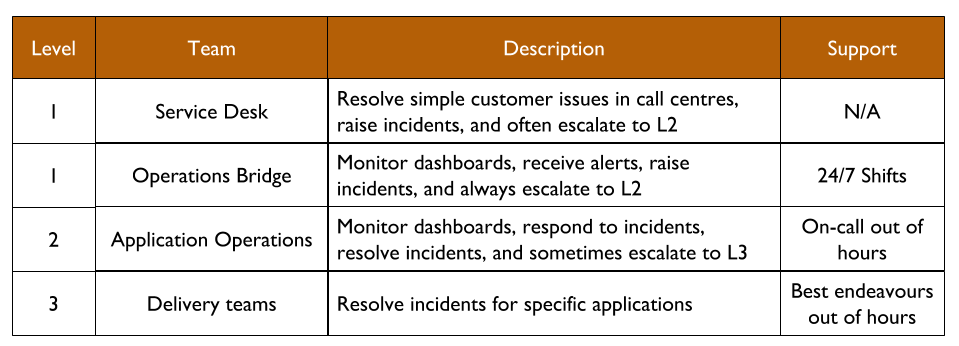

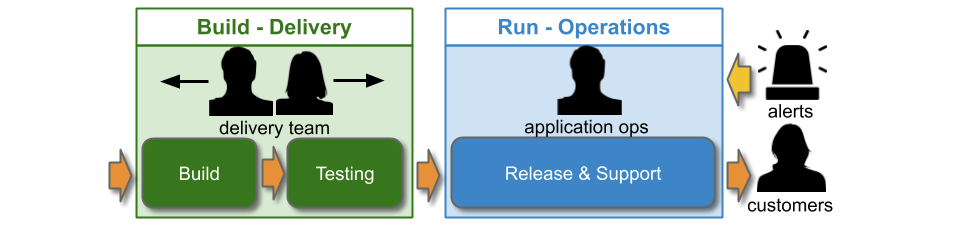

An organisation modelled on IT As A Cost Centre and Plan-Build-Run will have an Operations group in its IT department. Operations teams will be responsible for all Run activities, including deployments and production support for all applications. This can be referred to as You Build It Ops Run It. For example, consider a technology value stream comprising 1 development team in Delivery and an Application Operations team.

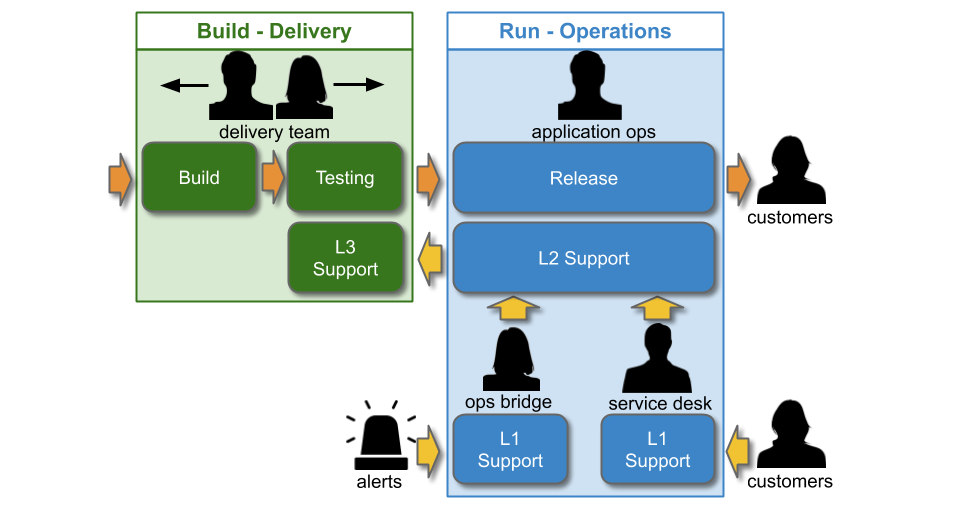

You Build It Ops Run It usually involves multi-level production support, in line with the ITIL v3 Service Operation standard:

The Service Desk will receive customer requests, and Operations Bridge will monitor dashboards and receive alerts. Both L1 teams will be trained to resolve simple technology issues, and to escalate more complicated tickets to L2. Application Operations will respond to incidents that require technology specialisation, and when necessary will escalate to an L3 Delivery team to contribute their expertise to an incident.

Cost accounting in IT As A Cost Centre creates a funding divide. A Delivery team will be budgeted under Capital Expenditure (CapEx), whereas Operations teams will be under Operational Expenditure (OpEx). An Operations team member will be paid a flat standby rate and a per-incident callout rate. A Delivery team member will not be paid for standby, and might be unofficially compensated per-callout with time off in lieu. Operations will be under continual pressure to reduce OpEx spending, and the Service Desk, Ops Bridge, and/or Application Operations might be outsourced to third party suppliers.

Discontinuous Delivery and inoperability

In ITSM and why three-tier support should be replaced with Swarming, Jon Hall argues “the current organizational structure of the vast majority of IT support organisations is fundamentally flawed”. Multi-level support in You Build It Ops Run It means non-trivial tickets will go from Service Desk or Ops Bridge through triage queues until the best-placed responder team is found. Repeated, unilateral ticket reassignments can occur between teams and individuals. Those handoffs can increase incident resolution time by hours, days, or even weeks. Rework can also be incurred as Application Operations introduce workarounds and data fixes, which await resolution in a Delivery backlog for months before prioritisation.
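As a rough sketch of how handoffs accumulate, the function below sums a hypothetical queue wait for every team a ticket is assigned to, including repeated reassignments. The team names come from the text; the hours are invented for illustration.

```python
def tiered_resolution_hours(path: list[str], queue_wait_hours: dict[str, float], fix_hours: float) -> float:
    """Estimate ticket resolution time under multi-level support.

    path: ordered teams the ticket is assigned to; the last one resolves it.
    Each assignment, including a repeated reassignment, adds that team's queue wait.
    """
    return sum(queue_wait_hours[team] for team in path) + fix_hours


# Hypothetical queue waits per team, in hours
waits = {"Ops Bridge": 0.5, "Application Operations": 4, "Delivery team": 24}

direct = ["Ops Bridge", "Application Operations", "Delivery team"]
# One unilateral reassignment back to Application Operations, then to Delivery again
bounced = direct + ["Application Operations", "Delivery team"]

print(tiered_resolution_hours(direct, waits, fix_hours=2))   # 30.5
print(tiered_resolution_hours(bounced, waits, fix_hours=2))  # 58.5
```

A single bounce between teams nearly doubles the resolution time in this example, even though the fix itself still takes 2 hours.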

In addition, You Build It Ops Run It has major disadvantages for fast customer feedback and iterative product development:

- Long deployment lead times – handoffs with Application Operations will inflate lead times by hours or days

- High knowledge synchronisation costs – Delivery team application knowledge and Application Operations incident knowledge will be lost in handoffs, without substantial synchronisation efforts

- Focus on outputs – software will be built as an output, with little to no understanding of product hypotheses or customer outcomes

- Fragile architecture – applications will be architected without limits on failure blast radius, and exposed to high impact incidents

- Inadequate telemetry – dashboards and alerts created by Application Operations in isolation will only be able to use operational metrics

- Traffic ignorance – challenges involved in managing live traffic will be localised and unable to inform design decisions

- Restricted collaboration – Application Operations and Delivery teams will find joint incident response hard, due to differences in ways of working and tools, and lack of Delivery team access to production

- Unfair on-call expectations – Delivery team members will be expected to be available out of hours without compensation for the inconvenience, and disruption to their lives

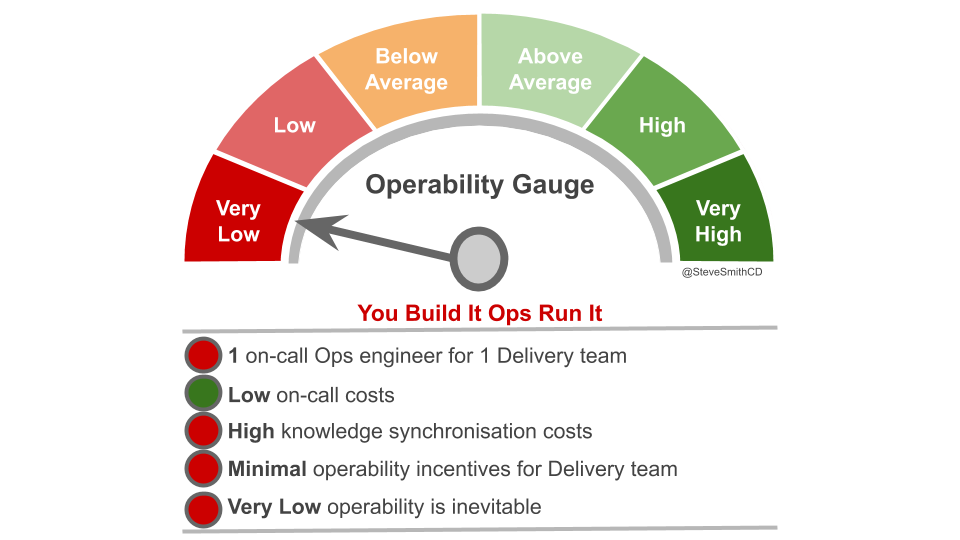

These problems can be traced back to incentives. With Application Operations responsible for production support, a Delivery team will be unaware of or uninvolved in production incidents. Application Operations cannot build operability into applications they do not own, and a Delivery team will have little reason to prioritise operational features. As a result, inoperability is inevitable.

You Build It Ops Run It injects substantial delays and rework into a technology value stream. This is likely to constrain Continuous Delivery if product demand is high. If weekly or fewer deployments are sufficient to meet demand, then Continuous Delivery is possible. However, if product demand calls for more than weekly deployments then You Build It Ops Run It can only lead to Discontinuous Delivery.

The Who Runs It series:

- You Build It Ops Run It

- You Build It You Run It

- You Build It Ops Run It at scale

- You Build It You Run It at scale

- You Build It Ops Sometimes Run It

- Implementing You Build It You Run It at scale

- You Build It SRE Run It

Acknowledgements

Thanks to Thierry de Pauw.

What are the different options for production support in IT as a Cost Centre? How can deployment throughput and application reliability be improved in unison? Why is You Build It You Run It so effective for both Continuous Delivery and Operability?

This series of articles describes a taxonomy for production support methods in IT as a Cost Centre, and their impact on both Continuous Delivery and Operability.

- You Build It Ops Run It

- You Build It You Run It

- You Build It Ops Run It at scale

- You Build It You Run It at scale

- You Build It Ops Sometimes Run It

- Implementing You Build It You Run It at scale

- You Build It SRE Run It

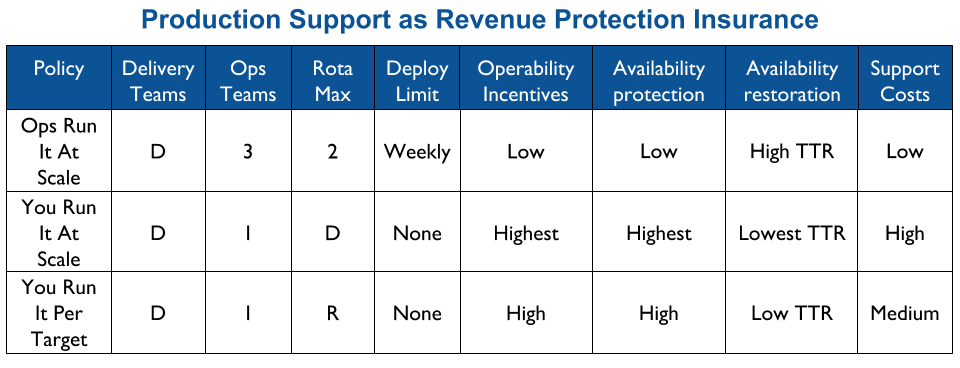

The series is summarised below. Availability targets should be chosen according to estimates of revenue loss on failure, which can be verified by Chaos Engineering or actual production incidents. Each additional nine of availability requires an order of magnitude more engineering effort/time. You Build It SRE Run It is best suited to four nines of reliability or more, You Build It You Run It is required for weekly deployments or more, and You Build It Ops Run It remains relevant when product demand is low.
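The downtime budget behind each availability target can be worked out directly. This sketch assumes a 30-day month. Each additional nine cuts the allowed downtime by a factor of ten (roughly 432, 43.2, and 4.3 minutes per month for 99%, 99.9%, and 99.99%), which is one way to read the order-of-magnitude increase in engineering effort.

```python
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60  # 43,200 minutes


def monthly_downtime_minutes(availability_percent: float) -> float:
    """Allowed downtime in a 30-day month for a given availability target."""
    return MINUTES_PER_30_DAY_MONTH * (100 - availability_percent) / 100


for target in (99.0, 99.9, 99.99):
    print(f"{target}%: {monthly_downtime_minutes(target):.1f} minutes per month")
```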

I’m delighted to announce I’m joining Equal Experts as a full-time Principal Consultant and Operability Practice Lead from 1st October.

Continuous Delivery Consulting & Equal Experts

In 2014, I founded Continuous Delivery Consulting as a boutique consultancy. I wanted to use my knowledge of Continuous Delivery and Operability to help enterprise organisations transform how they deliver and operate software. Continuous Delivery and Operability can be a powerful engine for an organisation to transform itself, from what I call IT As A Cost Centre to IT As A Business Differentiator.

For the past 6 years, Continuous Delivery Consulting has partnered with Equal Experts on multiple large-scale, complex transformation programmes. I’ve had leadership roles in some notable successes:

- Platform Operations Lead for 60 teams/600 microservices in a UK government department with £500Bpa revenue.

- Digital Platform Enablement Lead, and then Continuous Delivery & Operability Strategy Lead for 30 teams/100 microservices in a high street retailer with £2Bpa website revenue.

Working with Equal Experts has always been a positive experience. I’ve been challenged to apply Continuous Delivery & Operability to multi-faceted problems affecting tens of teams and hundreds of individuals. I’ve been treated as a grown-up, and have strived to respond in kind.

Now, I intend to shift my career in a different direction:

- I want a different role in enterprise organisations. I’d like to bring Product and IT people together, to help them reap the full benefits of a Digital transformation and delight their customers.

- I want to engage with CxO stakeholders. I’d like to understand their strategic problems, explain why Product and IT must be transformed simultaneously, and help them understand the power of Continuous Delivery.

- I want to play a bigger role in an organisation. I’d like to work on pre-sales and marketing propositions, to collaborate with people, and to play a mentoring role.

The right place for me to do that is at Equal Experts.

Equal Experts

I’m a huge admirer of Equal Experts. I like how the founders Thomas Granier and Ryan Sikorsky have built up Equal Experts, particularly the flat organisational structure and the emphasis on treating clients as genuine partners. I’ve worked with some amazing people within the Equal Experts Network, and learned a great deal. And I strongly identify with the Equal Experts Values, such as delivering as a team of equals, responsible innovation, and retaining a delivery focus.

As a Principal Consultant, I’ll act as a strategic advisor to client stakeholders, and provide delivery assurance for Digital Transformation. I’ll collaborate with worldwide business units on new client opportunities, work with people teams on all aspects of recruitment and resourcing, and mentor other Equal Experts consultants.

As an Operability Practice Lead, I’ll offer thought leadership and pragmatic advice to client stakeholders on Continuous Delivery & Operability. I’ll advise clients on how to transform their software delivery and reliability engineering capabilities. I’ll also work with worldwide sales and marketing teams on the core Equal Experts proposition, and curate the Operability community within Equal Experts.

At a time when an ever-increasing number of enterprise organisations are turning towards Digital Transformation and Continuous Delivery, I’m really excited about the future of Equal Experts.

How can You Build It You Run It at scale be implemented? How can support costs be balanced with operational incentives, to ensure multiple teams can benefit from Continuous Delivery and operability at scale?

This is part of the Who Runs It series.

Introduction

Traditionally, an IT As A Cost Centre organisation with roots in Plan-Build-Run will have Delivery teams responsible for building applications, and Operations teams responsible for deployments and production support. You Build It You Run It at scale fundamentally changes that organisational model. It means 10+ Delivery teams are responsible for deploying and supporting their own 10+ applications.

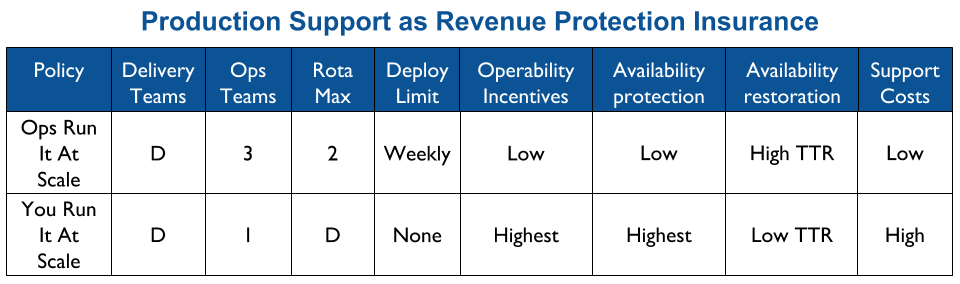

Applying You Build It You Run It at scale maximises the potential for fast deployment lead times, and fast incident resolution times across an IT department. It incentivises Delivery teams to increase operability via failure design, product telemetry, and cumulative learning. It is a revenue insurance policy that offers high risk coverage at a high premium. This is in contrast to You Build It Ops Run It at scale, which offers much lower risk coverage at a lower premium.

You Build It You Run It at scale can be intimidating. It has a higher engineering cost than You Build It Ops Run It at scale, as the table stakes are higher. These include a centralised catalogue of service ownership, detailed runbooks, on-call training, and global operability measures. It can also have support costs that are significantly higher than You Build It Ops Run It at scale.
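A centralised catalogue of service ownership can be as simple as a mapping from each application to one owning team, its runbook, and its rota. The following is a minimal in-memory sketch; the class names, fields, and runbook URL are hypothetical, and a real catalogue would likely live in a shared, queryable system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CatalogueEntry:
    application: str
    owning_team: str
    runbook_url: str     # hypothetical location of the application's runbook
    rota: Optional[str]  # None means no out of hours support


class ServiceCatalogue:
    """A centralised catalogue mapping each application to exactly one owning team."""

    def __init__(self) -> None:
        self._entries: dict[str, CatalogueEntry] = {}

    def register(self, entry: CatalogueEntry) -> None:
        if entry.application in self._entries:
            raise ValueError(f"{entry.application} already has an owner")
        self._entries[entry.application] = entry

    def owner_of(self, application: str) -> CatalogueEntry:
        """Look up who owns an application, e.g. when an alert fires for it."""
        try:
            return self._entries[application]
        except KeyError:
            raise LookupError(f"no owner registered for {application}") from None


catalogue = ServiceCatalogue()
catalogue.register(CatalogueEntry("Checkout", "Checkout team", "https://runbooks.example/checkout", "Checkout team rota"))
print(catalogue.owner_of("Checkout").owning_team)
```

Rejecting a second registration for the same application keeps ownership unambiguous, so an alert always resolves to one team.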

At its extreme, You Build It You Run It at scale will have D support rotas for D Delivery teams. The out of hours support costs for D rotas will be greater than for the 2 rotas in You Build It Ops Run It at scale, unless Operations support is on an exorbitant third party contract. As a result, You Build It Ops Run It at scale can be an attractive insurance policy, despite its severe disadvantages on risk coverage. This should not be surprising, as graceful extensibility trades off with robust optimality. As Mary Patterson et al. stated in Resilience and Precarious Success, “fundamental goals (such as safety) tend to be sacrificed with increasing pressure to achieve acute goals (faster, better, and cheaper)”.

You Build It You Run It at scale does not have to mean 1 Delivery team on-call for every 1 application. It offers cost effectiveness as well as high risk coverage when support costs are balanced with operability incentives and risk of revenue loss. The challenge is to minimise standby costs without weakening operability incentives.

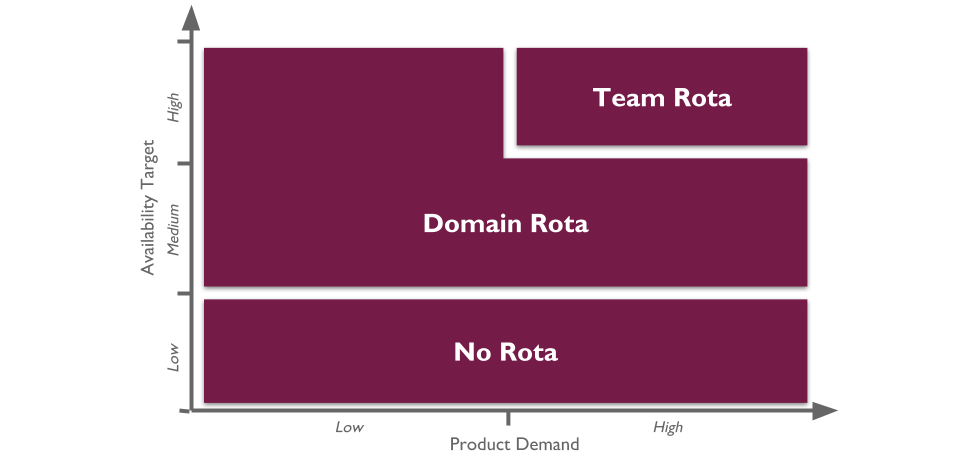

By availability target

The level of production support afforded to an application in You Build It You Run It at scale should be based on its availability target. In office hours, Delivery teams support their own applications, and halt any feature development to respond to an application alert. Out of hours, production support for an application is dictated by its availability target and rate of product demand.

Applications with a low availability target have no out of hours support. This is low cost, easy to implement, and counter-intuitively does not sacrifice operability incentives. A Delivery team responsible for dealing with overnight incidents on the next working day will be incentivised to design an application that can gracefully degrade over a number of hours. No on-call is also fairer than best endeavours, as there is no expectation for Delivery team members to disrupt their personal lives without compensation.

Applications with a high availability target and a high rate of product demand each have their own team rota. A team rota is a single Delivery team member on-call for one or more applications from their team. This is classic You Build It You Run It, and produces the maximum operability incentives as the Delivery team have sole responsibility for their application. When product demand for an application has been met, it should be downgraded to a domain rota.

Applications with a medium availability target share a domain rota. A domain rota is a single Delivery team member on-call for a logical grouping of applications with an established affinity, from multiple Delivery teams.
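The rota rules above can be summarised as a small decision function. This is a sketch only: the `low`/`medium`/`high` labels are hypothetical stand-ins for an organisation's real availability targets, and product demand is reduced to a boolean.

```python
from enum import Enum


class Rota(Enum):
    NONE = "no out of hours support"
    DOMAIN = "domain rota"
    TEAM = "team rota"


def out_of_hours_rota(availability_target: str, high_product_demand: bool) -> Rota:
    """availability_target is one of 'low', 'medium', 'high' (hypothetical labels)."""
    if availability_target not in ("low", "medium", "high"):
        raise ValueError(f"unknown availability target: {availability_target}")
    if availability_target == "low":
        # Overnight incidents wait for the next working day
        return Rota.NONE
    if availability_target == "high" and high_product_demand:
        # Classic You Build It You Run It: the team supports its own applications
        return Rota.TEAM
    # Medium targets, and high targets whose product demand has been met,
    # share a domain rota across sibling teams
    return Rota.DOMAIN
```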

The domain construct should be as fine-grained and flexible as possible. It needs to minimise on-call cognitive load, simplify knowledge sharing between teams, and focus on organisational outcomes. The following constructs should be considered:

- Product domains – sibling teams should already be tied together by customer journeys and/or sales channels

- Architectural domains – sibling teams should already know how their applications fit into technology capabilities

The following constructs should be rejected:

- Geographic domains – per-location rotas for teams split between locations would produce a mishmash of applications, cross-cutting product and architectural boundaries and increasing on-call cognitive load

- Technology domains – per-tech rotas for teams split between frontend and backend technologies would completely lack a focus on organisational outcomes

A domain rota will create strong operability incentives for multiple Delivery teams, as they have a shared on-call responsibility for their applications. It is also cost effective, as the number of people on-call does not scale linearly with teams or applications. However, domain rotas can be challenging if knowledge sharing barriers exist, such as multiple teams in one domain with dissimilar engineering skills and/or technology choices. It is important to be pragmatic, and technology choices can be used as a tiebreaker on a product or architectural construct where necessary.

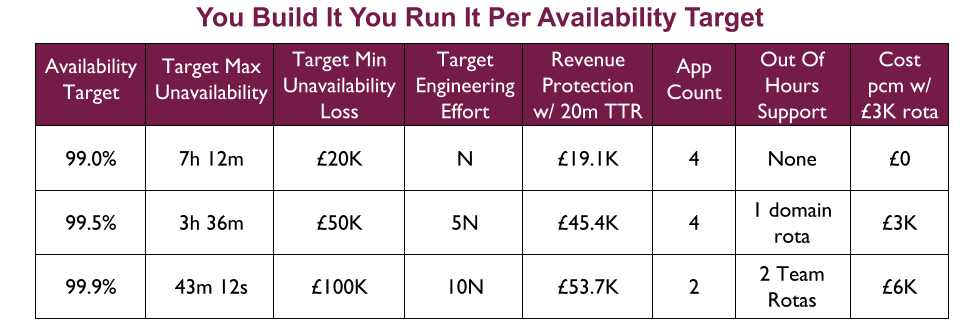

For example, a Fruits R Us organisation has 10 Delivery teams, each with 1 application. There are 3 availability targets of 99.0%, 99.5%, and 99.9%. An on-call rota is £3Kpcm in standby costs. If all 10 applications had their own rota, the support cost of £30Kpcm would likely be unacceptable.

Assume Fruits R Us managers assign minimum revenue losses of £20K, £50K, and £100K to their availability targets, and ask product owners to consider their minimum potential revenue losses per target. The Product and Checkout applications could lose £100K+ in 43 minutes, so they remain at 99.9% and have their own rotas. 4 applications in the same Fulfilment domain could lose £50K+ in 3.6 hours, so they are downgraded to 99.5% and share a Fulfilment domain rota across 4 teams. 4 applications in the Stock domain could lose £20K in 7.2 hours but no more, so they are downgraded to 99.0% with no out of hours on-call. This would result in a support cost of £9Kpcm while retaining strong operability incentives.
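The Fruits R Us arithmetic can be checked with a short script. Standby cost is charged per distinct rota, so applications sharing a rota share its cost. Product and Checkout are from the example; the Fulfilment and Stock application names are hypothetical placeholders.

```python
STANDBY_COST_PER_ROTA_GBP = 3_000  # £3Kpcm per on-call rota, from the example

# Application -> out of hours rota; None means no out of hours on-call.
# Fulfilment and Stock application names are hypothetical placeholders.
rota_by_application = {
    "Product": "Product team rota",    # 99.9%
    "Checkout": "Checkout team rota",  # 99.9%
    **{f"Fulfilment app {n}": "Fulfilment domain rota" for n in range(1, 5)},  # 99.5%
    **{f"Stock app {n}": None for n in range(1, 5)},                           # 99.0%
}


def monthly_standby_cost_gbp(rotas: dict) -> int:
    """Standby cost is paid per distinct rota, not per application."""
    distinct_rotas = {rota for rota in rotas.values() if rota is not None}
    return len(distinct_rotas) * STANDBY_COST_PER_ROTA_GBP


one_rota_per_app = {app: f"{app} rota" for app in rota_by_application}

print(monthly_standby_cost_gbp(one_rota_per_app))     # 30000
print(monthly_standby_cost_gbp(rota_by_application))  # 9000
```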

Optimising costs

A number of techniques can be used to optimise support costs for You Build It You Run It Per Availability Target:

- Recalibrate application availability targets. Application revenue should be regularly analysed, and compared with the engineering time and on-call costs linked to an availability target. Where possible, availability targets should be downgraded. It should also be possible to upgrade a target, including for fixed time windows during peak trading periods

- Minimise failure blast radius. Rigorous testing and deployment practices including Canary Deployments, Dark Launching, and Circuit Breakers should reduce the cost of application failure, and allow for availability targets to be gradually downgraded. These practices should be validated with automated and exploratory Chaos Engineering on a regular basis

- Align out of hours support with core trading hours. A majority of website revenue might occur in one timezone, and within core trading hours. In that scenario, production support hours could be redefined from 0000-2359 to 0600-2200 or similar. This could remove the need for out of hours support 2200-0600, and alerts would be investigated by Delivery teams on the following morning

- Automated, time-limited shuttering on failure. A majority of product owners might be satisfied with shuttering on failure out of hours, as opposed to application restoration. If so, an automated shutter with per-application user messaging could be activated on application failure, for a configurable time period out of hours. This could remove the need for out of hours support entirely, but would require a significant engineering investment upfront and operability incentives would need to be carefully considered
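The last two techniques can be combined in a sketch: alerts inside redefined support hours are left to Delivery teams, and out of hours a failing application is shuttered for a bounded period. The 0600-2200 window comes from the example above; the `ShutterConfig` type, the four-hour limit, and the single-timezone assumption are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta
from typing import Optional


@dataclass
class ShutterConfig:
    message: str                      # per-application user messaging
    max_duration: timedelta           # configurable, time-limited shutter period
    support_start: time = time(6, 0)  # redefined production support hours,
    support_end: time = time(22, 0)   # e.g. 0600-2200 as in the text


def within_support_hours(moment: time, start: time, end: time) -> bool:
    """True if moment falls inside [start, end), allowing windows that wrap midnight."""
    if start <= end:
        return start <= moment < end
    return moment >= start or moment < end


def shutter_until(now: datetime, app_failing: bool, cfg: ShutterConfig) -> Optional[datetime]:
    """Return when an automated shutter should lift, or None if no shutter applies.

    In support hours a failing application is left for the Delivery team to restore;
    out of hours it is shuttered for at most cfg.max_duration.
    """
    if not app_failing:
        return None
    if within_support_hours(now.time(), cfg.support_start, cfg.support_end):
        return None
    return now + cfg.max_duration


cfg = ShutterConfig("Checkout is temporarily unavailable, please try again later", timedelta(hours=4))
print(shutter_until(datetime(2024, 1, 10, 23, 30), app_failing=True, cfg=cfg))
```

Bounding the shutter period means a long outage still surfaces to a Delivery team the next morning, rather than staying hidden indefinitely.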

This list is not exhaustive. As with any other Continuous Delivery or operability practice, You Build It You Run It at scale should be founded upon the Improvement Kata. Ongoing experimentation is the key to success.
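Of the practices listed above, a Circuit Breaker is compact enough to sketch. This is a generic, single-threaded illustration of the pattern, not a production library or a method prescribed here. The thresholds and the injectable clock are assumptions, included so that the behaviour is easy to test.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout_s:
                # Open: fail fast rather than calling a struggling dependency
                raise CircuitOpenError("circuit open")
            # Timeout elapsed: half-open, so let one trial call through
        try:
            result = fn()
        except Exception:
            self._failures += 1
            # Open (or re-open after a failed trial) once the threshold is reached
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Any success closes the circuit and resets the failure count
        self._failures = 0
        self._opened_at = None
        return result
```

In use, each call to a dependency would be wrapped, e.g. `breaker.call(lambda: fetch_stock_level(sku))`, where `fetch_stock_level` is a hypothetical client function. Failing fast while the circuit is open stops one failing dependency from tying up its callers, which is how the pattern helps to limit failure blast radius.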

Production support is a revenue insurance policy, and implementing You Build It You Run It at scale is a constant balance between support costs and operability. You Build It You Run It Per Availability Target ensures on-call Delivery team members do not scale linearly with teams and/or applications, while trading away some operability incentives and some Time To Restore – but far less than You Build It Ops Run It at scale. Overall, You Build It You Run It Per Availability Target is an excellent starting point.

The Who Runs It series:

- You Build It Ops Run It

- You Build It You Run It

- You Build It Ops Run It at scale

- You Build It You Run It at scale

- You Build It Ops Sometimes Run It

- Implementing You Build It You Run It at scale

- You Build It SRE Run It

Acknowledgements

Thanks to Thierry de Pauw.